- What Is Artificial Intelligence (AI)?

- What Is AI Security Posture Management (AI-SPM)?

- What Is an AI Worm?

- What Is Machine Learning (ML)?

- What Is Explainable AI (XAI)?

- What Is AI Governance?

- What Is the AI Development Lifecycle?

-

AI Concepts DevOps and SecOps Need to Know

- Foundational AI and ML Concepts and Their Impact on Security

- Learning and Adaptation Techniques

- Decision-Making Frameworks

- Logic and Reasoning

- Perception and Cognition

- Probabilistic and Statistical Methods

- Neural Networks and Deep Learning

- Optimization and Evolutionary Computation

- Information Processing

- Advanced AI Technologies

- Evaluating and Maximizing Information Value

- AI Security Posture Management (AI-SPM)

- AI-SPM: Security Designed for Modern AI Use Cases

- Artificial Intelligence & Machine Learning Concepts FAQs

What Is AI Security? [Protecting Models, Data, and Trust]

AI security is the discipline of protecting artificial intelligence systems from threats that compromise their integrity, confidentiality, or reliability.

It safeguards data, models, and infrastructure across the AI lifecycle to prevent tampering, misuse, and unauthorized access.

Effective AI security ensures that AI systems operate as intended, remain trustworthy over time, and comply with emerging standards for safe and responsible use.

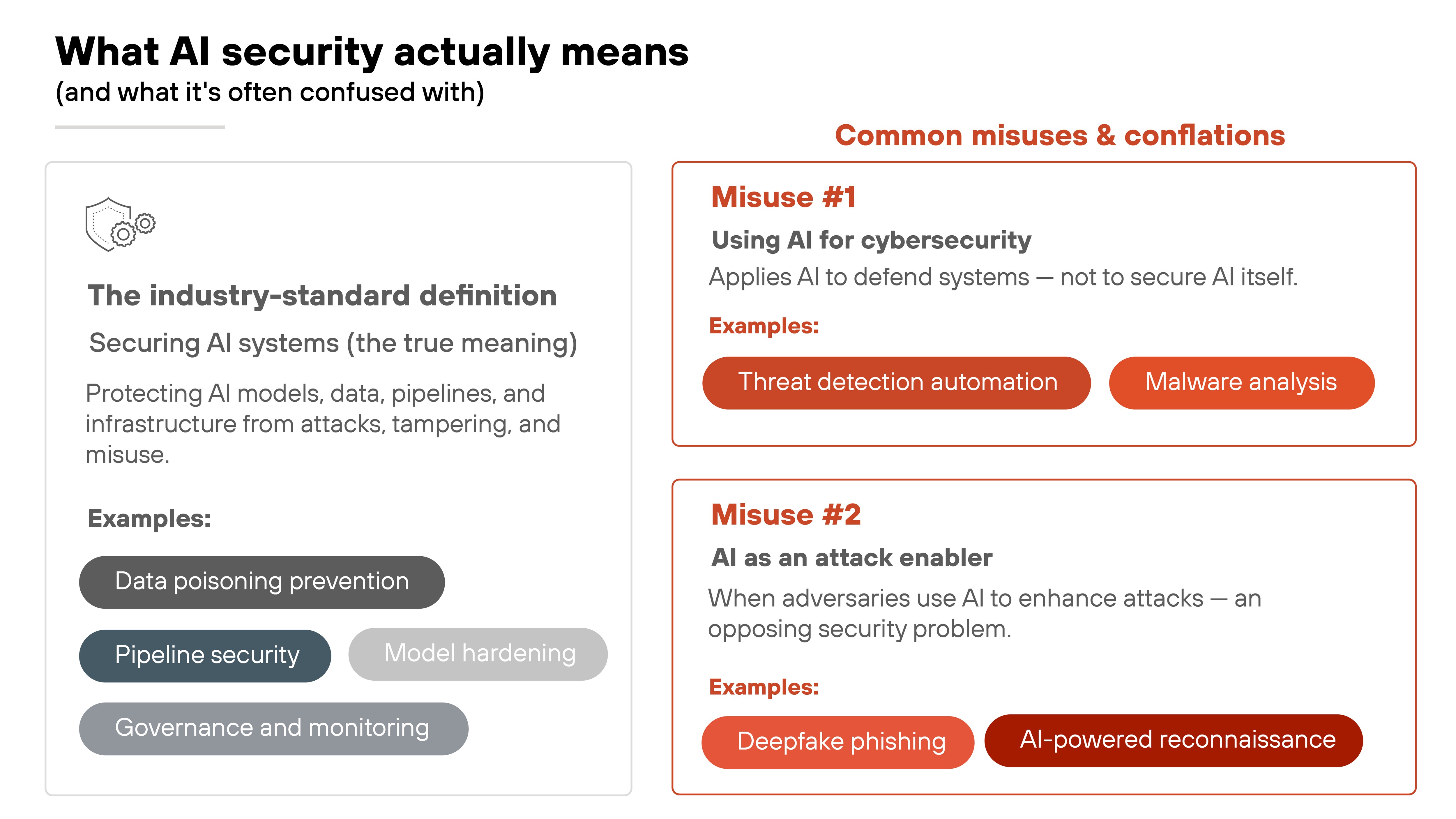

What does the industry really mean by “AI security”?

The phrase AI security gets used loosely. Some treat it as if it means any overlap between artificial intelligence and cybersecurity.

At its core, AI security refers first and foremost to securing AI systems — protecting models, data, pipelines, and infrastructure so they can operate safely and reliably. This is the foundation for trustworthy AI.

The term, however, is often confused because it gets used or referenced in at least three different contexts:

- Securing AI systems themselves — protecting data, models, pipelines, and deployments from attacks and misuse.

- Using AI for cybersecurity — applying machine learning or generative models to detect and respond to threats.

- AI as an attack enabler — where adversaries use AI to enhance phishing, malware, or other offensive tactics.

Confusion arises because all three are real. But they're not the same problem space.

In this article, we focus primarily on securing AI systems, while also clarifying how this differs from using AI in security products or confronting AI-powered threats.

- What Is Generative AI Security? [Explanation/Starter Guide]

- How Generative AI Is Used in Cybersecurity

What's driving today's focus on AI security?

AI has shifted from experimentation to widespread deployment.

In just a few years, models went from research labs and pilot projects to powering customer service, financial decisions, and healthcare tools.

Which means these systems now handle sensitive data–and influence outcomes that matter.

That change explains why AI security is suddenly a central concern.

Early adoption was about proving AI could work. Now the risks of poisoning training data, stealing models, or misusing outputs are tied directly to business disruption and public trust. What was once theoretical is now operational.

Industry momentum also plays a role.

Investment in generative AI accelerated adoption across every sector. Organizations plugged models into cloud services, APIs, and internal workflows at speed. And the result is a much larger attack surface than traditional software ever presented.

Regulation is catching up at the same time.

The EU's AI Act, U.S. federal guidance (e.g., NIST's AI Risk Management Framework), and standards such as ISO/IEC 42001 are putting formal expectations around governance and security. So compliance pressure now amplifies the business need to secure AI systems.

In short: rapid adoption, visible risk, and new regulatory demands collided. That's what makes AI security one of the industry's most pressing priorities today.

Where do AI systems face the most security risk?

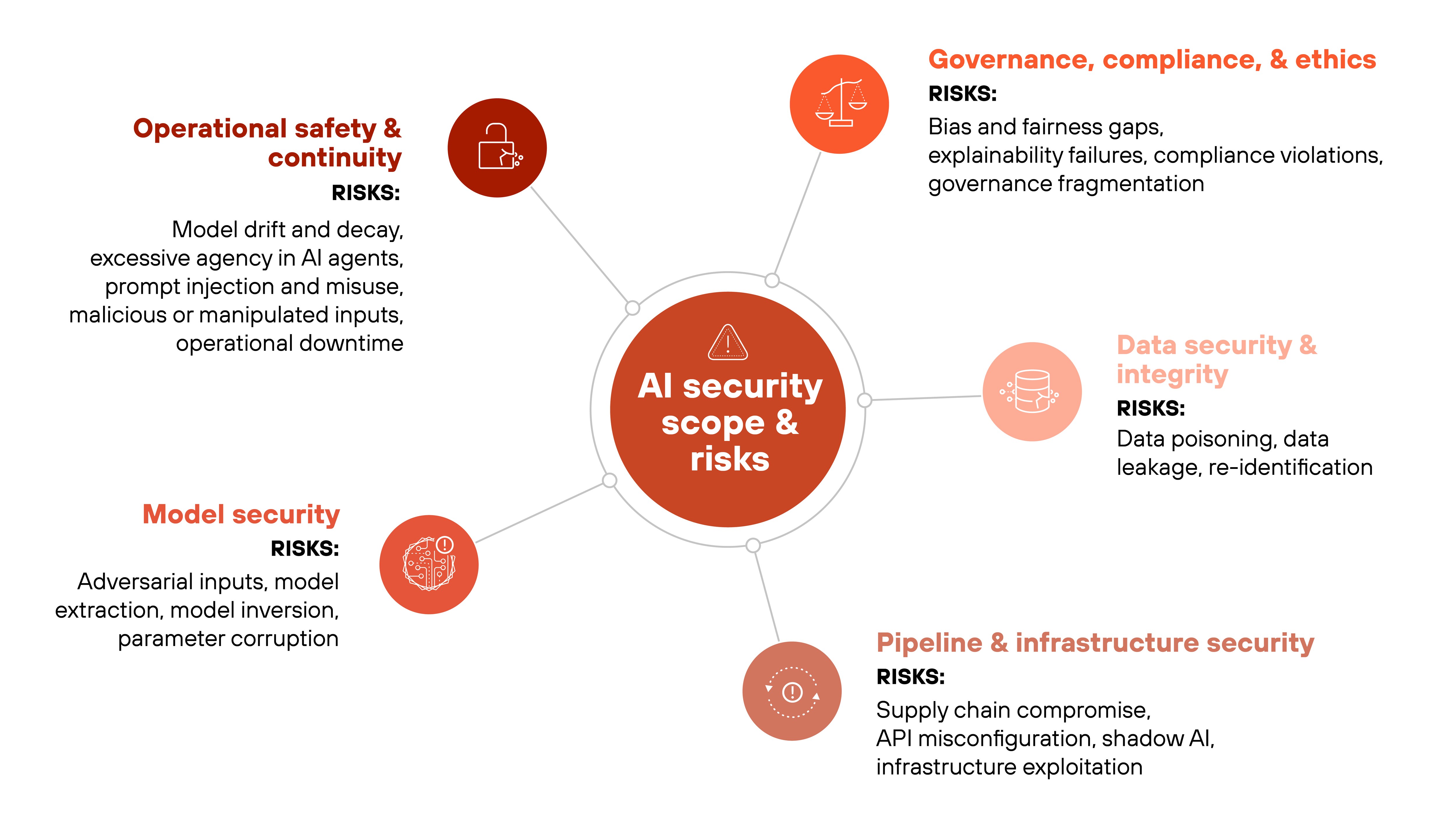

With the drivers clear, the next question is scope: what exactly falls under AI security, and where do organizations need to focus their defenses?

AI security spans more than one layer, covering:

- Data that feeds AI systems

- Models that process and generate outputs

- Infrastructure that stores, transports, and executes workloads

- Policies and governance frameworks that ensure safe, accountable operation

Each area has distinct failure modes that attackers can exploit.

In other words, AI security is a broad discipline that protects both the technology and its use. So let's dig into what it really involves.

QUIZ: HOW STRONG IS YOUR AI APPLICATION SECURITY POSTURE?

Take our interactive quiz to identify and strengthen weak points.

Take quizData security and integrity

Data is the foundation of every AI system. It shapes how models learn, behave, and make decisions.

Consequently, any compromise to the data—whether during collection, storage, or use—can directly affect system reliability and trustworthiness.

AI security therefore includes strict controls to preserve the confidentiality, integrity, and availability of data throughout its lifecycle. These controls govern how data is sourced, labeled, validated, and protected against unauthorized access or manipulation.

Risks:

- Data poisoning: Insertion of malicious or misleading samples into training or fine-tuning datasets to alter model behavior.

- Data leakage: Unintended exposure of sensitive information through model outputs, logs, or shared datasets.

- Re-identification: Reconstruction of personal or proprietary information from anonymized or aggregated data, undermining privacy guarantees.

Model security

Models are the functional core of an AI system. They drive predictions and decisions. If the model is compromised, the entire system is.

Model security focuses on protecting that integrity. It involves safeguarding architectures, weights, and parameters from tampering, theft, and misuse — and ensuring that models behave consistently under real-world conditions.

Risks:

- Adversarial inputs: Crafted examples designed to deceive models into producing incorrect or unsafe outputs.

- Model extraction: Repeated querying that recreates a model's logic or parameters, exposing intellectual property.

- Model inversion: Attacks that reconstruct sensitive training data from outputs.

- Parameter corruption: Direct manipulation of weights that introduces backdoors or distorts behavior.

Pipeline and infrastructure security

AI systems depend on connected pipelines, APIs, and cloud environments to move data and deploy models.

Interconnection expands the attack surface because every dependency—from third-party libraries to storage buckets—can become a path to compromise.

Pipeline and infrastructure security focuses on protecting the supporting systems that enable AI to function — from code repositories to runtime environments. It ensures the integrity of data transfers, dependency chains, and execution layers across the AI lifecycle.

Risks:

- Supply chain compromise: Insertion of malicious code or components through third-party libraries, pre-trained models, or open-source dependencies.

- API misconfiguration: Exposure of endpoints that allows unauthorized access, injection, or data leakage.

- Shadow AI: Unsanctioned model deployments that operate outside formal governance, creating visibility and compliance gaps.

- Infrastructure exploitation: Attacks on containers, orchestration tools, or storage environments that disrupt operations or alter workflows.

Governance, compliance, and ethics

Governance defines accountability, applicable standards, and how systems stay compliant over time.

Effective governance aligns AI operations with frameworks such as NIST's AI RMF and ISO/IEC 42001, while meeting regulatory obligations under laws like the EU AI Act. It also ensures transparency, fairness, and ethical oversight across the AI lifecycle.

Risks:

- Bias and fairness gaps: Models trained on unbalanced data can perpetuate discrimination or skew outcomes.

- Explainability failures: Lack of interpretability makes it difficult to validate or contest model behavior, eroding accountability.

- Compliance violations: Insufficient documentation or weak auditing can breach data protection and AI-specific regulatory requirements.

- Governance fragmentation: Siloed teams and inconsistent policies leave blind spots in oversight and risk management.

Operational safety and continuity

Operational safety ensures AI systems remain stable, predictable, and secure after deployment. It focuses on maintaining reliability as models evolve, data shifts, and external conditions change.

The goal is to prevent performance degradation and maintain trust in live environments. Monitoring, anomaly detection, and recovery planning all support resilience when systems behave unexpectedly or fail under stress.

Risks:

- Model drift and decay: Gradual divergence from intended behavior as data or environments change.

- Excessive agency in AI agents: Excessive permissions or decision-making freedom in AI agents that act without sufficient oversight.

- Prompt injection and misuse: Malicious or manipulated inputs that alter model responses or leak sensitive data.

- Operational downtime: Interruptions or cascading failures that disrupt dependent systems or services.

FREE AI RISK ASSESSMENT

Get a complimentary vulnerability assessment of your AI ecosystem.

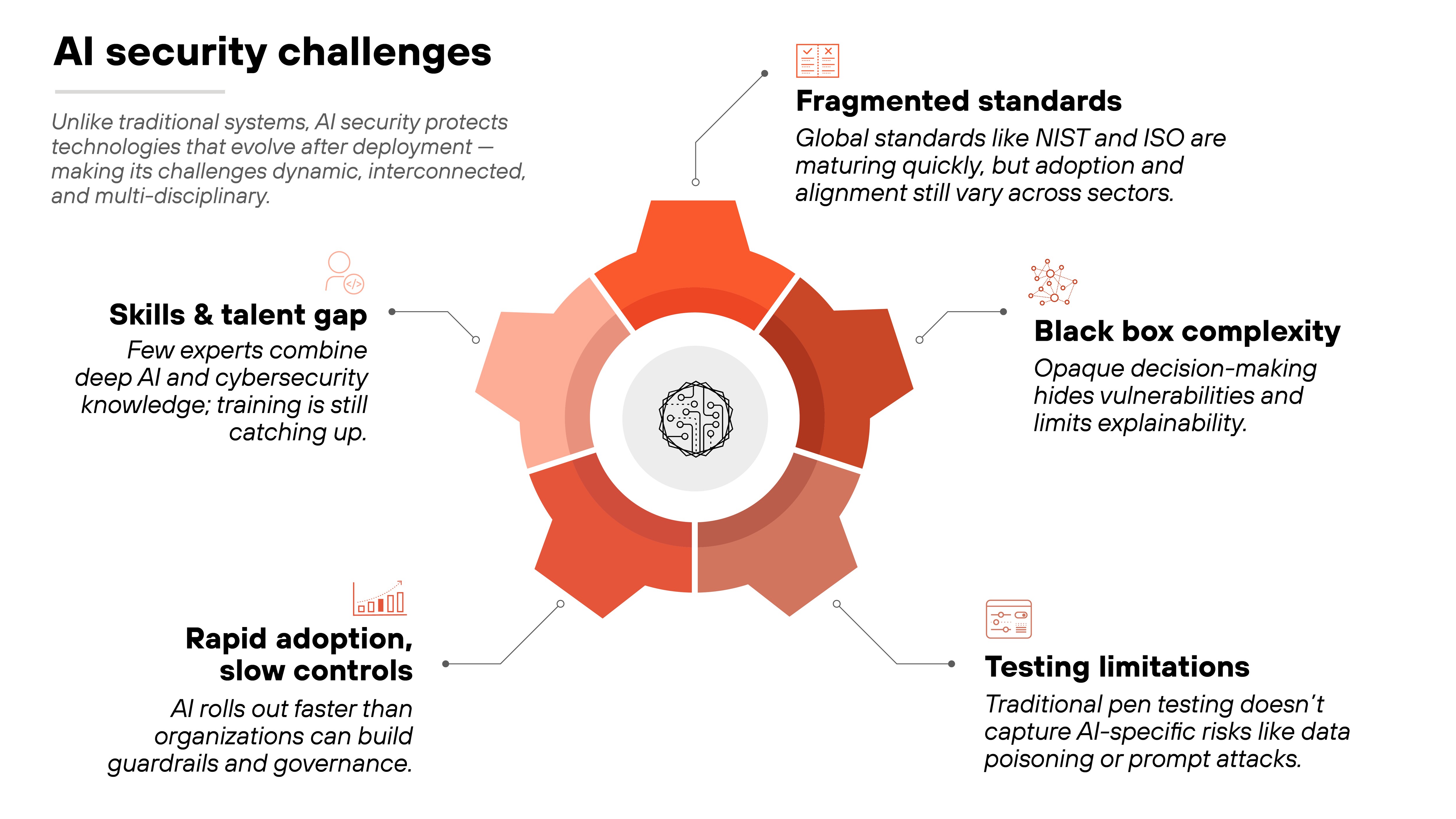

Claim assessmentWhat makes AI security uniquely challenging?

AI security introduces challenges that go beyond traditional cybersecurity. The systems it protects are dynamic, data-driven, and capable of evolving after deployment.

That creates new considerations. Security teams must account not only for infrastructure and code, but also for model behavior, data integrity, and human oversight. That leads to a discipline that spans technical, operational, and governance dimensions.

Plus, standards and tools for AI security are advancing quickly, but implementation remains uneven across industries. Many organizations are still aligning on how to test, validate, and monitor AI systems at scale.

The challenges are significant — but they're solvable. The security community is developing the frameworks, practices, and cross-functional expertise needed to make AI systems safer and more trustworthy.

Here's where those challenges come from.

Fragmented standard adoption

AI security standards are maturing quickly, but adoption remains uneven. Frameworks from NIST, ISO, and OWASP now provide structure, but no universally accepted baseline exists across sectors or regions.

As a result, organizations interpret requirements differently. Some align with ISO/IEC 42001 or NIST's AI RMF, while others emphasize model testing or governance. The outcome is a varied security posture that's difficult to benchmark or compare at scale.

Black box complexity

Most AI models operate as black boxes. Which means their internal decision-making is opaque, even to the teams that build them.

This makes it difficult to spot subtle vulnerabilities, confirm fairness, or explain why a system behaves the way it does. Because of that, risks can remain hidden until models fail under real-world conditions.

Testing limitations

Simulating adversaries isn't straightforward.

Traditional penetration testing doesn't apply well to AI because the attack surface includes data, prompts, and model weights. For example, testing for data poisoning requires not just code review but also monitoring of training pipelines because it's not visible in classic app-layer tests.

Without specialized tools and methods, organizations may miss entire classes of vulnerabilities.

Rapid adoption vs. slow controls

AI deployment is moving fast. Models are being plugged into products, services, and workflows faster than security controls can keep up.

Cloud APIs, shadow AI, and third-party tools all expand the attack surface before guardrails are fully in place. So security teams are often forced to retrofit protections after deployment rather than building them in from the start.

Talent and skills gap

Finally, there's a people problem. AI security still faces a workforce gap. A limited number of practitioners have deep expertise in both AI development and cybersecurity, which can make it difficult to evaluate risks, test for adversarial behavior, or apply emerging standards effectively.

It's worth noting: this is beginning to change. Universities, standards bodies, and industry leaders are rapidly expanding education and certification programs focused on trustworthy and secure AI.

The talent pipeline is growing—but the need for multidisciplinary expertise continues to outpace it for now.

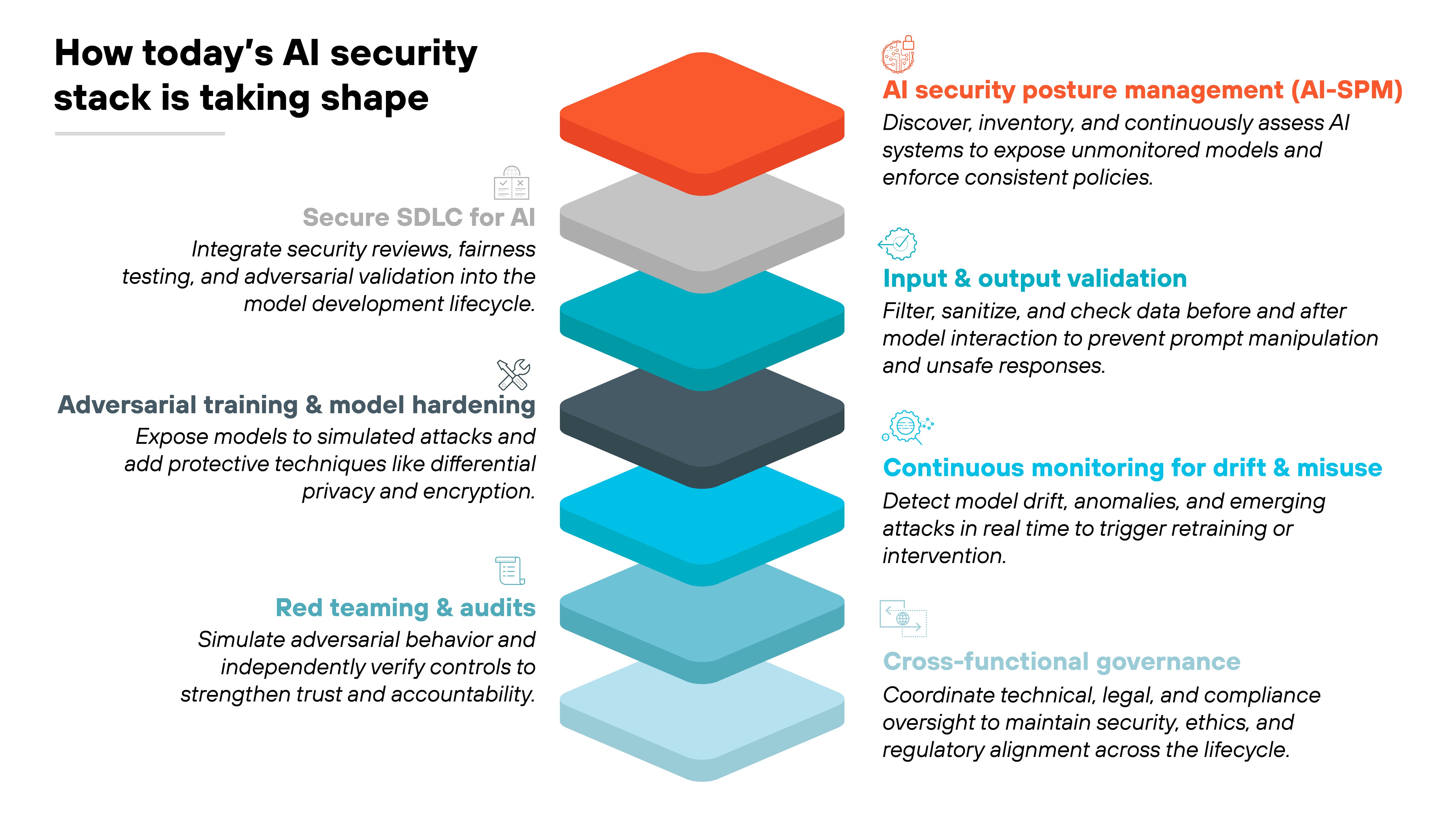

What approaches are emerging to secure AI systems?

As AI systems move from prototypes to production, security is evolving alongside them.

The focus is no longer just on protecting data or code. It's on securing entire lifecycles. That means embedding safeguards at every stage, from model design and training to deployment and monitoring.

In practice, the industry is converging on a set of complementary approaches. Some strengthen visibility and governance. Others harden models against manipulation or establish guardrails for safe use.

Together, these practices form the emerging playbook for managing AI risk with the same rigor as traditional cybersecurity.

AI security posture management (AI-SPM)

AI security posture management (AI-SPM) is emerging as a key approach for bringing visibility and control to AI systems. It gives organizations visibility into what models exist, where they run, how they interact with data, vulnerabilities, and misconfigurations.

That discovery process helps uncover shadow AI and unmonitored pipelines. With a full inventory, teams can track risks and enforce consistent controls.

Basically, AI-SPM establishes the baseline oversight needed before other defenses can work effectively.

DEMO: CORTEX AI-SPM

See for yourself how Cortex AI-SPM provides visibility across every AI model, application, and data pipeline.

Request demoSecure SDLC for AI

A secure software development lifecycle (SDLC) adapted for AI brings security into every stage of building and deploying models.

It means validating training data sources, reviewing pipelines for weaknesses, and testing for fairness or adversarial resilience before release. These steps reduce the chance that flaws or bias enter production.

Secure SDLC shifts AI security from afterthought to built-in discipline.

Input and output validation

AI expands the attack surface to both inputs and outputs. Malicious prompts, poisoned datasets, or unsafe responses can all create risks.

Input validation applies checks and filters before data reaches the model, while output validation ensures results comply with safety rules or policy guardrails.

Together, they help contain threats unique to AI that traditional software rarely faces.

Adversarial training and model hardening

Attackers can manipulate AI models with adversarial examples designed to fool predictions or leak training data. Adversarial training prepares models for this by exposing them to manipulated inputs during development.

Hardening adds protections such as differential privacy, encryption, or simplified architectures to reduce exposure. The goal is to make models more resilient to manipulation and theft.

Continuous monitoring for drift and misuse

AI systems change over time. Data shifts cause model drift, and attackers adapt their tactics.

Continuous monitoring tracks performance, bias, and anomalous behaviors so issues are caught early. This allows retraining, policy adjustment, or incident response before small problems escalate into failures.

Monitoring is critical for keeping AI reliable in production.

Red teaming and audit practices

Red teaming simulates how real attackers might interact with an AI system, from prompt injection to model extraction. It reveals weaknesses that static testing misses.

Audits add accountability by independently verifying that controls align with frameworks and regulations.

Together, red teaming and audits create a feedback loop that strengthens both defenses and trust.

Cross-functional governance

AI security requires coordination beyond technical teams.

Governance frameworks involve legal, compliance, and business leaders to ensure systems meet regulatory, ethical, and operational standards. This structure clarifies accountability, reduces gaps between security and compliance, and enforces consistent oversight.

Cross-functional governance ensures AI security is not just technical but organizational.

- AI Risk Management Frameworks: Everything You Need to Know

- What Is Explainable AI (XAI)?

- DSPM for AI: Navigating Data and AI Compliance Regulations

- How to Build a Generative AI Security Policy