What Is a Container?

A container is a lightweight, portable, and self-sufficient unit that packages an application along with its dependencies, libraries, and runtime environment. Containers enable applications to run consistently across different computing environments, simplifying development, testing, and deployment processes. They isolate applications from the underlying system, ensuring that each application runs in a dedicated user space.

Containers use the host operating system's kernel but maintain their own file system, which contributes to their efficiency and ease of use. Popular container platforms include Docker and containerd, while orchestration tools like Kubernetes help manage and scale container deployments.

Containers Explained

A container is a stand-alone executable package that includes everything needed to run a piece of software, including the code, runtime, libraries, and system tools. Think of containers as the shipping containers of the software world: they package the code and all its dependencies so that the application can run consistently across any computing environment. From a security standpoint, they offer isolation, which limits the blast radius in the event of a security incident.

With faster startup times and lower resource consumption compared to virtual machines, containers also provide easier access to the host system's bare-metal hardware, which is beneficial for applications that utilize GPU processing, for example.

Figure 1: Container image

Although the application or microservice inside a container is isolated from those in other containers on the same server, containers still share some resources, most notably the kernel, with the host operating system. This makes them resource efficient but only quasi-independent. Serving as a lightweight form of virtualization, containers offer a degree of abstraction and isolation unavailable when hosting applications directly on a server. But they’re not as isolated as virtual machines, which have their own, completely independent operating system environment.

Video: Containers are one of the most exciting innovations in application development and cloud computing, but organizations looking to leverage containers need to know the best way to secure them.

Container platforms like Docker provide an extra layer of abstraction, making it easier to manage microservices and implement CI/CD pipelines.

Because containers abstract applications from the host operating system, it’s easy to deploy containers without applying special configurations to the host. No matter which operating system version you use or how it’s configured, you can deploy a container on it quickly and easily, provided the system supports the container runtime you’re using.

The Confluence: Agility Meets Security

When you integrate microservices, CI/CD, and containers, you create an ecosystem that greatly outperforms the sum of its parts. Microservices offer modular architecture, CI/CD promotes DevOps, and containers deliver portability and isolation. Together, they enable organizations to develop and deploy applications at unprecedented speed without compromising on security.

- For DevOps, the triad means a streamlined workflow, automated security checks, and a reduced attack surface.

- For AppSec, granularity simplifies vulnerability management and enhances security posture.

- For the C-suite, technology translates to business agility, reduced time-to-market, and robust security — key differentiators in a competitive marketplace.

By understanding and implementing this container-based triad, organizations position themselves for success in an increasingly complex and threat-laden landscape. When combined with container orchestration, these technologies enhance the management, coordination, and scalability of the application lifecycle with greater efficiency than has previously been possible.

But let’s back up and get up to speed on container basics.

Understanding Container Components

To effectively navigate container security, you should first become familiar with the fundamental components that constitute a container. These building blocks include container images, runtimes, and isolation mechanisms, each of which plays a unique role in the container ecosystem.

Container Images

Container images serve as the blueprint for containers, encapsulating the application code, libraries, and dependencies required for the application to run. Built from a series of layered file systems, these images are immutable, meaning they don't change once they’re created. When you instantiate a container, the runtime uses the image as a template, creating a writable layer atop the immutable layers. Security implications arise from outdated or vulnerable software packages within the image, making regular scanning and updates essential.
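The relationship between immutable image layers and a container's writable layer can be modeled in a few lines. The following is a simplified sketch, not how a storage driver is actually implemented: the layer contents, file paths, and the `start_container` helper are hypothetical, and file deletion is not modeled.

```python
from collections import ChainMap
from types import MappingProxyType

# Hypothetical image: each layer maps file paths to file contents.
# MappingProxyType makes a layer read-only, mirroring immutable image layers.
base_layer = MappingProxyType({
    "/etc/os-release": "minimal-linux",
    "/bin/sh": "shell-binary",
})
app_layer = MappingProxyType({"/app/server.py": "print('hello')"})

# Upper layers take precedence over lower ones, so they are listed first.
IMAGE_LAYERS = (app_layer, base_layer)


def start_container(image_layers):
    """Return a container file system: a fresh writable layer over the image."""
    writable_layer = {}
    # ChainMap sends every write to the first mapping and reads through the rest.
    return ChainMap(writable_layer, *image_layers)


container_a = start_container(IMAGE_LAYERS)
container_b = start_container(IMAGE_LAYERS)

# A write inside container A lands only in A's own writable layer.
container_a["/etc/os-release"] = "patched-in-container-a"

print(container_a["/etc/os-release"])  # value from A's writable layer
print(container_b["/etc/os-release"])  # still the value from the image
print(base_layer["/etc/os-release"])   # the image layer itself is unchanged
```

Because both containers read through to the same read-only layers, a change made in one container never reaches the image or any other container started from it.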

Container Runtimes

The container runtime is the software responsible for executing containers on the host operating system. In fact, containers, and specifically the container runtime, have redefined the OS. By taking on work that previously fell to the OS, the container runtime has allowed the OS to be trimmed to a container-optimized version comprising only the components needed to provide access to physical resources and run the container runtime.

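The runtime handles tasks such as pulling images, creating and starting containers, and managing their lifecycle. It also applies security policies, such as profiles enforced by Linux Security Modules (LSMs), and interacts with the host kernel to set up isolation mechanisms. Popular runtimes include Docker, containerd, and CRI-O. Kubernetes works with containerd and CRI-O directly through its Container Runtime Interface (CRI), while Docker Engine has needed the cri-dockerd adapter since dockershim was removed in Kubernetes 1.24.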

Since its release in 2013, Docker has stood out as a pivotal player among container runtimes and has become synonymous with containerization. At its core, Docker packages applications and their dependencies into virtual containers that run on any Linux, Windows, or macOS computer. This encapsulation isolates the application from its environment, reducing the risk of system-level vulnerabilities affecting the application or vice versa.

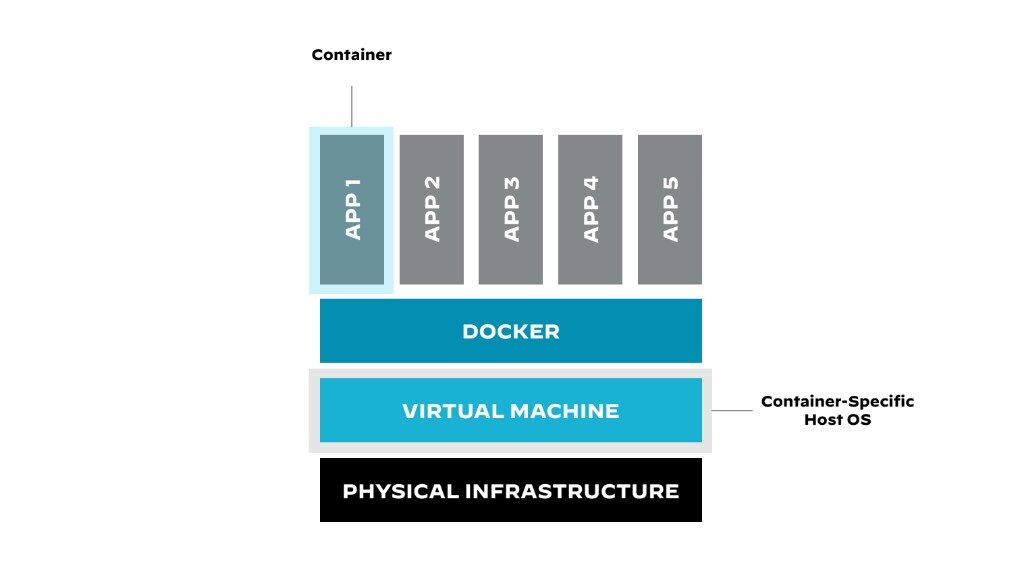

Figure 2: Container runtime deployment architecture within Docker engine

Docker Engine Mechanics

Figure 2 gives you an idea of how the Docker engine works. The client sends a request to the Docker engine to create a container, and the Docker engine creates it from an image, which is itself built from the instructions in a Dockerfile. The container is then started, and the application runs. The figure also shows how the Docker engine interacts with the container registry: it can pull images from the registry or push images to it.

Key Features of the Docker Engine

- Create images from Dockerfiles

- Create containers from images

- Manage containers, such as starting, stopping, and deleting them

- Interact with image registries to pull and push images

- Provide networking for containers

- Enhance security for containers
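Most of the features listed above correspond to Docker CLI subcommands that a client sends to the engine. The sketch below only assembles the argument lists and prints them, so it runs without Docker installed. The image tag and container name are hypothetical placeholders.

```python
import shlex

# Hypothetical names used only for illustration.
IMAGE = "registry.example.com/team/web:1.4.2"
CONTAINER = "web-1"


def build_image(tag, context_dir="."):
    """Create an image from the Dockerfile found in context_dir."""
    return ["docker", "build", "-t", tag, context_dir]


def run_container(tag, name):
    """Create and start a container from an image, in the background."""
    return ["docker", "run", "--detach", "--name", name, tag]


def stop_container(name):
    return ["docker", "stop", name]


def remove_container(name):
    return ["docker", "rm", name]


def push_image(tag):
    return ["docker", "push", tag]


def pull_image(tag):
    return ["docker", "pull", tag]


lifecycle = [
    build_image(IMAGE),
    push_image(IMAGE),
    pull_image(IMAGE),
    run_container(IMAGE, CONTAINER),
    stop_container(CONTAINER),
    remove_container(CONTAINER),
]

for command in lifecycle:
    # Printed rather than executed; pass `command` to subprocess.run to run it.
    print(shlex.join(command))
```

Keeping each command as a list rather than a single string avoids shell quoting problems if the lists are later handed to `subprocess.run`.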

Isolation Mechanisms

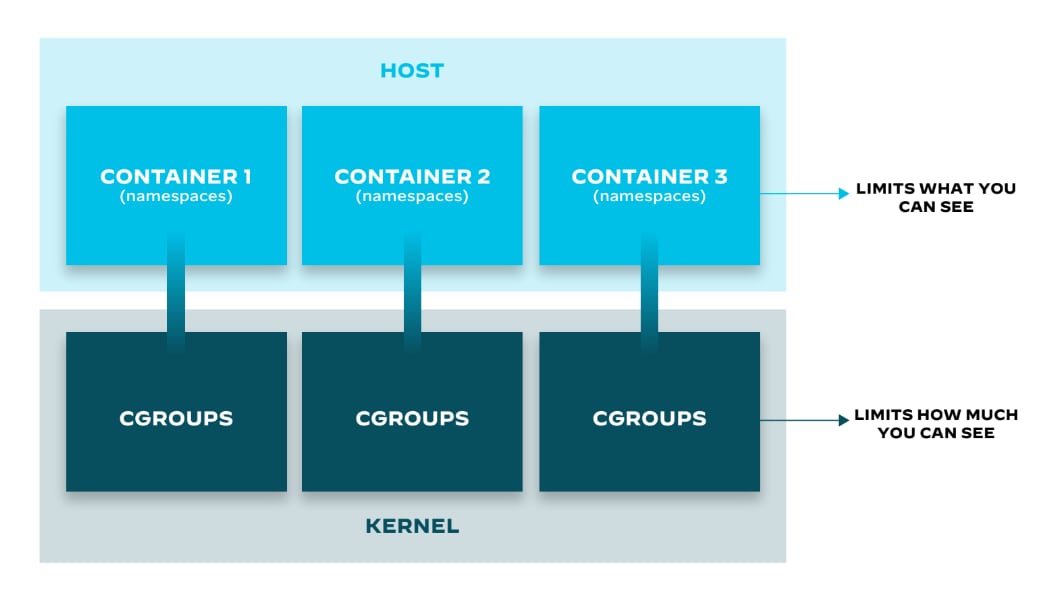

Isolation mechanisms are kernel-level features that segregate containers from each other and from the host system. Two primary mechanisms are namespaces and control groups (cgroups). Namespaces and cgroups provide the key Linux building blocks on which the container environment is built.

Docker uses both of these Linux kernel features, ensuring that each container operates within its confined environment with controlled access to system resources, which mitigates the risk of one container affecting another.

Namespaces

Namespaces offer isolation by partitioning system resources so that each container only sees its isolated view of the operating environment. For instance, PID namespaces isolate process IDs, while network namespaces isolate network interfaces and routing tables. Proper setup is critical, as misconfigurations in namespaces can lead to container breakout.

Linux namespaces create isolation for running processes, limiting their access to system resources without the processes being aware of the restriction. A namespace creates a virtually isolated user space, giving an application its own view of system resources such as the file system, network stack, process IDs, and user IDs. This abstraction allows each application to run independently, without interference from other applications on the same host.

The Linux kernel contains various types of namespaces, each with unique properties:

- A user namespace possesses its own set of user IDs and group IDs for assignment to processes. Notably, this allows a process to have root privilege within its user namespace without having it in other user namespaces.

- A process ID (PID) namespace assigns a unique set of PIDs to processes, separate from the set of PIDs in other namespaces. The first process created in a new namespace has PID 1, and child processes receive subsequent PIDs. If a child process is created in its own PID namespace, it has PID 1 inside that namespace and a different, ordinary PID in the parent process's namespace.

- A network namespace contains an independent network stack, including a private routing table, set of IP addresses, socket listing, connection tracking table, firewall, and other network-related resources.

- A mount namespace features an independent list of mount points visible to the processes in the namespace. This enables mounting and unmounting file systems in a mount namespace without affecting the host file system.

- An interprocess communication (IPC) namespace has its own IPC resources, such as POSIX message queues.

- A UNIX Time-Sharing (UTS) namespace allows a single system to appear to have different host and domain names for different processes.
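On Linux, the namespaces a process belongs to are visible as symbolic links under `/proc/<pid>/ns`, with targets of the form `pid:[4026531836]`. Two processes that show the same number for an entry share that namespace. The sketch below lists the namespaces of the current process. The helper names are illustrative, and the function returns an empty dictionary on systems without `/proc/self/ns`.

```python
import os
import re

NS_DIR = "/proc/self/ns"
NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target):
    """Split a link target such as 'pid:[4026531836]' into ('pid', 4026531836)."""
    match = NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link: {target!r}")
    return match.group("kind"), int(match.group("inode"))


def current_namespaces(ns_dir=NS_DIR):
    """Return {entry name: namespace inode} for this process, or {} off Linux."""
    if not os.path.isdir(ns_dir):
        return {}
    namespaces = {}
    for entry in sorted(os.listdir(ns_dir)):
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        except (OSError, ValueError):
            continue  # skip entries that cannot be read or parsed
        namespaces[entry] = inode
    return namespaces


for name, inode in current_namespaces().items():
    print(f"{name:20} {inode}")
```

Running the same script on the host and inside a container would typically print different numbers for entries such as `pid`, `net`, and `mnt`, which is the isolation described above made visible.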

Control Groups (Cgroups)

Control Groups, commonly known as cgroups, limit and allocate resources like CPU, memory, and I/O to containers. They ensure that a single container doesn't monopolize system resources, which contributes to system stability and enhanced performance. Incorrect cgroup configurations can lead to resource starvation or denial-of-service attacks.

A cgroup is a Linux kernel feature that limits, isolates, and accounts for the resource usage (CPU, memory, disk I/O, network, etc.) of a collection of processes.

Figure 3: Namespace and cgroup architecture

Cgroups provide the following features:

Resource limits

Users can set a maximum limit on the amount of a specific resource (e.g., CPU, memory, disk I/O) that a process or group of processes within a cgroup can use. By setting resource limits, users can prevent a single process or group of processes from consuming too many resources and affecting other processes or the system as a whole.

Prioritization

Users can define the relative importance of processes or groups of processes within different cgroups to counter resource contention. Prioritization allows users to allocate a higher or lower share of a resource, such as CPU, disk, or network, to a specific cgroup compared to other cgroups. This helps ensure that critical processes receive resources needed to maintain performance, while less critical processes receive lower resource shares.

Accounting

Cgroups monitor and report the usage of resources, such as CPU, memory, disk I/O, and network, for a group of processes within a cgroup. This monitoring capability enables users to analyze application performance, identify bottlenecks, and optimize resource allocation based on real-time data.

Control

With cgroups, users can efficiently manage the state of all processes within a cgroup using a single command. This control feature allows processes to be frozen, stopped, or restarted as needed, providing granular control over process execution and improving overall system management.

By combining namespaces and cgroups, it’s possible to securely run multiple applications on a single host with each application residing in its isolated environment.
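To make resource limits and prioritization concrete: in cgroup v2, they are set by writing values to interface files inside a cgroup's directory, such as `memory.max`, `cpu.max`, and `cpu.weight`. The sketch below translates friendly limits into those file contents. It is an illustration under stated assumptions: the helper names are hypothetical, and the demo writes to a temporary directory rather than to `/sys/fs/cgroup`, which would require root privileges.

```python
import os
import tempfile


def cgroup_v2_settings(memory_bytes=None, cpu_cores=None, cpu_weight=None,
                       period_us=100_000):
    """Translate friendly limits into cgroup v2 interface file contents."""
    settings = {}
    if memory_bytes is not None:
        # Resource limit: hard cap on memory, in bytes.
        settings["memory.max"] = str(memory_bytes)
    if cpu_cores is not None:
        # Resource limit: "<quota> <period>" in microseconds.
        quota_us = int(round(cpu_cores * period_us))
        settings["cpu.max"] = f"{quota_us} {period_us}"
    if cpu_weight is not None:
        # Prioritization: relative CPU share, valid range 1 to 10000.
        if not 1 <= cpu_weight <= 10_000:
            raise ValueError("cpu_weight must be between 1 and 10000")
        settings["cpu.weight"] = str(cpu_weight)
    return settings


def apply_settings(cgroup_dir, settings):
    """Write each value to its interface file inside cgroup_dir."""
    for filename, value in settings.items():
        with open(os.path.join(cgroup_dir, filename), "w") as handle:
            handle.write(value + "\n")


settings = cgroup_v2_settings(
    memory_bytes=256 * 1024 * 1024, cpu_cores=0.5, cpu_weight=200
)

# Demo only: a temporary directory stands in for a real cgroup directory.
with tempfile.TemporaryDirectory() as demo_dir:
    apply_settings(demo_dir, settings)
    for filename in sorted(os.listdir(demo_dir)):
        with open(os.path.join(demo_dir, filename)) as handle:
            print(filename, "=", handle.read().strip())
```

Here half a CPU core becomes a quota of 50,000 microseconds in every 100,000-microsecond period. Accounting and control follow the same file-based pattern: usage can be read from files such as `memory.current` and `cpu.stat`, and writing `1` to `cgroup.freeze` freezes every process in the group.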

Container Infrastructure

By delving into container infrastructure, organizations can unlock opportunities to optimize application development, boost scalability, and fortify security — ultimately leading to significant cost savings and heightened efficiency.

Host Operating System

The host operating system serves as the foundation on which container runtimes operate. Typically, lightweight, minimal OS distributions are preferred to reduce the attack surface. The host OS is responsible for interacting with the hardware and providing system resources to containers. Hardening the host OS, applying security patches, and maintaining minimal privileges are key to enhancing container security.

Container Registries

To make it easy to share container images and manage different image versions of the same application, teams typically use container registries. Container registries are repositories for storing and distributing container images. They play a pivotal role in the CI/CD pipeline, serving as the source from which runtimes pull images.

Private Container Registries

Enterprises often use private registries to store proprietary or sensitive images. These registries typically reside behind a firewall with restricted access. Common security measures include image signing, vulnerability scanning, and role-based access control.

Organizations can host container registries remotely or on-premises, either by creating and deploying their own registry or using a commercially supported private registry service.

Public Container Registries

Offering a vast array of community-contributed images, public registries like Docker Hub are great for individuals and small teams looking to get up and running as quickly as possible. While convenient, they present a security risk because images may be unvetted or malicious. Always verify the provenance and integrity of images pulled from public sources.
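Integrity checks usually rest on content digests: registries identify image content by a SHA-256 digest written as `sha256:<hex>`, so content that does not hash to a pinned digest has been altered. The following is a minimal sketch of that comparison. The manifest bytes are a hypothetical stand-in, and a digest check covers integrity only. Establishing provenance additionally requires signature verification, which is not shown here.

```python
import hashlib
import hmac


def sha256_digest(blob: bytes) -> str:
    """Return the digest of blob in the 'sha256:<hex>' form used for images."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def verify_digest(blob: bytes, expected_digest: str) -> bool:
    """Check that blob hashes to expected_digest."""
    algorithm, _, expected_hex = expected_digest.partition(":")
    if algorithm != "sha256" or len(expected_hex) != 64:
        raise ValueError(f"unsupported or malformed digest: {expected_digest!r}")
    actual_hex = hashlib.sha256(blob).hexdigest()
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(actual_hex, expected_hex.lower())


# Hypothetical manifest content, pinned at the time the image was vetted.
vetted_manifest = b'{"schemaVersion": 2, "layers": ["..."]}'
pinned_digest = sha256_digest(vetted_manifest)

# Later, content pulled from a public registry is checked against the pin.
tampered_manifest = vetted_manifest + b" "

print(verify_digest(vetted_manifest, pinned_digest))    # matches the pin
print(verify_digest(tampered_manifest, pinned_digest))  # does not match
```

Referring to images by digest instead of by a mutable tag means a pull either returns exactly the vetted content or fails the check.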

Know Your Container Types

Containers come in various flavors, each designed to meet specific needs in the software development lifecycle. Understanding these types is crucial for implementing appropriate security controls.

Application Containers

Application containers, such as those run by Docker and orchestrated by Kubernetes, are designed to package and run a single service or application. They're ephemeral, so they can be stopped, started, or replaced without affecting other containers. Application containers package the code that developers work on and can include any combination of technologies, such as the LAMP stack (Linux, Apache, MySQL, and PHP), the MEAN stack (MongoDB, Express.js, AngularJS, Node.js), or another application development platform. Because they run specific tasks on a minimal OS with only the essential libraries, they present a reduced attack surface.

Figure 4: Containerized application deployed on a physical infrastructure

Operating System (OS) Containers

OS containers differ from application containers in that they function more like lightweight virtual machines. They contain full operating systems and can run multiple services and applications. Due to their complexity, OS containers present a larger attack surface, making it crucial to harden the contained OS, just as one would with a standard operating system. It's also important to segregate sensitive workloads to minimize the impact of a security incident, should one occur.

Like other containers, OS containers share the host kernel, but they also execute a full init system. In Unix-based operating systems, init (short for initialization) is the first process or daemon started during system boot, and it runs until the system shuts down. Running init enables the container to host multiple processes, so it functions much like a full virtual machine. LXC and LXD are examples of this approach.

Figure 5: Docker-created images running on the host operating system while a VM runs on top of the OS

Running systemd inside a container (Docker or Open Container Initiative [OCI]) can approximate an OS container. This approach allows developers to treat the container like an OS and install software using standard methods (e.g., yum, apt-get). A developer could leverage this approach to quickly migrate existing applications to a cloud-native form, providing an interim path to microservices while developing true cloud-native versions of the application in parallel.

A container-optimized OS (COS) serves as the base layer of the cloud-native stack, providing access to physical resources. When deploying on private infrastructure, the OS carries minimum hardware requirements, so check with the orchestration vendor to determine the necessary resources. Most vendors support both Linux- and Windows-based operating systems, depending on the application.

Since the core infrastructure component is often built on a Linux-based kernel, it's essential to evaluate certain services and functions. Both OS hardening and security software must be factored into the build to protect the hosted containers and microservices from potential threats.

Figure 6: Containerized application deployed to a Kubernetes cluster

Super Privileged Containers

Super privileged containers (SPCs) run with elevated privileges and have broader access to system resources and services. Administrators typically employ them for tasks such as monitoring, logging, and backup. Though powerful, SPCs pose a significant security risk if compromised, as they can provide an attacker with extensive control over the host and other containers. To mitigate risk, limit their use to essential operations and employ strict access controls and auditing to monitor activity.
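Auditing for elevated privileges can start from the JSON that `docker inspect` prints, whose `HostConfig` section records settings such as `Privileged`, `PidMode`, `NetworkMode`, `IpcMode`, and `CapAdd`. The sketch below flags containers that use them. The sample records and container names are hypothetical, and the list of checks is illustrative rather than exhaustive.

```python
import json


def privilege_findings(inspect_record):
    """List the elevated-privilege settings found in one inspect record."""
    host_config = inspect_record.get("HostConfig") or {}
    findings = []
    if host_config.get("Privileged"):
        findings.append("runs in privileged mode")
    if host_config.get("PidMode") == "host":
        findings.append("shares the host PID namespace")
    if host_config.get("NetworkMode") == "host":
        findings.append("shares the host network namespace")
    if host_config.get("IpcMode") == "host":
        findings.append("shares the host IPC namespace")
    # CapAdd is null when no capabilities were added.
    for capability in host_config.get("CapAdd") or []:
        findings.append(f"adds capability {capability}")
    return findings


# Hypothetical, trimmed-down output in the shape of `docker inspect`.
SAMPLE_INSPECT_OUTPUT = """
[
  {"Name": "/node-monitor",
   "HostConfig": {"Privileged": true, "PidMode": "host",
                  "NetworkMode": "host", "IpcMode": "private",
                  "CapAdd": null}},
  {"Name": "/web",
   "HostConfig": {"Privileged": false, "PidMode": "",
                  "NetworkMode": "bridge", "IpcMode": "private",
                  "CapAdd": ["NET_ADMIN"]}}
]
"""

report = {
    record["Name"]: privilege_findings(record)
    for record in json.loads(SAMPLE_INSPECT_OUTPUT)
}

for name, findings in report.items():
    print(name, "->", findings or "no elevated privileges found")
```

A report like this gives reviewers a short list of containers whose elevated access needs a documented justification.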

Constructing container infrastructure on dedicated container hosts in a private or public cloud requires careful administration throughout the application lifecycle. SPCs serve as powerful development tools, whether used alongside container orchestration systems like Kubernetes, OpenShift, or GCP Anthos, or with standalone container hosts. SPCs can perform tasks such as using loadable kernel modules (LKMs) for debugging and analysis, extending the running kernel and proving valuable for container development and debugging.

Figure 7: Super privileged container created using Docker deployed to the host machine, which can access all the resources on the host machine

Because a closer interdependence exists between SPCs and the host kernel, administrators must carefully select the host OS. This selection becomes more crucial in large clustered or distributed environments (e.g., hybrid cloud) where communication is complex and troubleshooting proves challenging.

Harnessing the Efficiency of Containerization

Containers offer myriad benefits in the world of application development and deployment. Consider just a few compelling use cases that demonstrate how your organization can leverage container technology to streamline processes, optimize resources, and enhance application performance and resilience.

- Microservices architecture: Containers are well suited for deploying and managing microservices, which allows for faster development cycles, easy scaling, and improved fault isolation.

- Continuous integration and deployment (CI/CD): Containers support DevOps initiatives by streamlining the CI/CD process. They ensure that software is built, tested, and deployed in a consistent and reproducible environment, promoting rapid iteration and quick deployment while reducing the risk of environment-specific issues.

- Application isolation: Containers provide an additional layer of security by isolating applications and their dependencies into separate, sandboxed environments. This helps prevent potential security vulnerabilities in one application from impacting other applications or the host system.

- Multicloud and hybrid deployments: Containerization increases flexibility and avoids vendor lock-in by enabling organizations to deploy applications across multiple cloud providers or combine on-premises and cloud resources.

- Development and testing: Developers can use containers to create identical environments across their local machines and testing environments, ensuring that the application behaves consistently throughout the development process.

- Legacy application modernization: Containers can help modernize legacy applications by making them more portable, easier to maintain, and better suited for cloud-native environments.

- Resource optimization: Containers enable better resource utilization by allowing multiple applications to share the same host operating system, reducing the overhead associated with running multiple virtual machines.

- Application scaling and orchestration: Containers make it easier to scale applications in response to changing workloads and user demands. Container orchestration platforms like Kubernetes can automatically manage scaling based on resource usage or other performance metrics, helping to maintain application performance without manual intervention.

- Load balancing and high availability: Containers make it easier to deploy and manage load balancers and high availability solutions, ensuring that applications can handle fluctuations in user traffic and remain accessible even during hardware or software failures.

- Serverless computing: Organizations can use containers as the underlying technology for serverless platforms like AWS Lambda, Google Cloud Functions, and Azure Functions. In this model, developers write and deploy code as isolated functions automatically executed in response to events — without having to manage the underlying infrastructure.

- Edge computing: Containers can be deployed on edge devices, such as IoT devices or content delivery network (CDN) nodes, enabling efficient processing and analysis of data closer to its source.

- Batch processing and data pipelines: Organizations use containers to create and manage data processing pipelines for tasks like data ingestion, transformation, and analysis. Doing so ensures that each stage of the pipeline runs in a consistent environment and can scale as needed.

- Machine learning and AI: Containers help manage the complex dependencies and environments required for machine learning and AI applications. They make it easier to deploy and scale models, ensuring that training and inference workloads execute in a consistent environment.

- Development and collaboration: DevOps teams can use containers to create shareable development environments, enabling engineers to work on the same project with consistent configurations and dependencies. This reduces "works on my machine" issues and simplifies onboarding for new team members.

- Experimentation and A/B testing: Containers can be used to deploy multiple versions of an application, making it easier to conduct A/B testing, canary releases, and other types of experiments.

- Application migration: Containers help with migrating applications between on-premises, cloud, and hybrid environments. Packaging the application and its dependencies eliminates the need to make modifications in the target environment.

- Distribution: Organizations can use containers to package and distribute software, ensuring that users have access to the latest version of an application and its dependencies in a consistent, reproducible format.

- Cross-platform development: Containers make it easier to develop and test applications on multiple operating systems and platforms, ensuring compatibility and consistent behavior. This is particularly useful for teams working with diverse technology stacks or targeting multiple platforms.

- Database and stateful applications: While containers are often associated with stateless applications, they can also be used for stateful applications like databases. Using containers for databases can simplify deployment, versioning, and migration, while maintaining data persistence and durability.

- Disaster recovery and backup: Organizations utilize containers to create portable and easily replicable environments for disaster recovery and data backup purposes. By encapsulating applications and their dependencies in containers, organizations can recreate their infrastructure and applications in the event of a disaster or system failure, reducing downtime and data loss risks.

Exploring these and other use cases will help you to envision how your organization can harness the advantages of containers to advance your business outcomes.

Container Security

Containers simplify application deployment and offer fast startup times, but they also introduce a new layer to your software environment that requires security measures. To ensure optimal container security, it's crucial to scan container images for malware, implement strict access controls for container registries, and utilize container runtime security tools to address potential vulnerabilities that may arise while a container is in operation.

Container FAQs

What Is a Container Image?

A container image serves as a standalone, executable software package that includes everything needed to run a piece of software, including the code, runtime, system tools, libraries, and settings. It differs from a container in that the image is a static file, whereas a container is a runtime instance of the image.

When an image is executed, it runs as a container, which is an instance of the image in a runtime environment. The container image ensures consistency across multiple deployments, guaranteeing that software runs the same way, regardless of where it's deployed. This distinction is crucial in cloud environments, where consistency and portability are key.

What Is Knative?

Knative is an open-source Kubernetes-based platform designed to develop, deploy, and manage serverless and event-driven applications. It simplifies the complexities of building and running cloud-native applications by providing mechanisms for orchestrating source-to-container workflows, routing and managing traffic during deployment, auto-scaling, and binding running services to event ecosystems.

Knative works by extending Kubernetes and Istio, offering a set of middleware components essential for building modern, source-centric, and container-based applications that can run anywhere.

What Is Container Networking?

Container networking involves the interconnection of containers across multiple host systems, enabling them to communicate with each other and with external networks. It includes configuring network interfaces, IP addresses, DNS settings, and routing rules. Container networking must ensure isolation and security while maintaining efficient communication pathways.

Advanced container networking involves overlay networks, network namespaces, and software-defined networking (SDN) to create scalable and secure network architectures. These technologies are essential where high availability, scalability, and security are paramount.
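As a small illustration of the IP addressing side of container networking, the sketch below hands out addresses from a bridge subnet in the way a simple address manager might. The `BridgeIpam` class and container names are hypothetical. The `172.17.0.0/16` range matches Docker's default bridge network, where the gateway takes the first usable address.

```python
import ipaddress


class BridgeIpam:
    """Toy address manager for a single bridge network (illustrative only)."""

    def __init__(self, cidr):
        self.network = ipaddress.ip_network(cidr)
        # iter() keeps this working whether hosts() yields lazily or not.
        usable = iter(self.network.hosts())
        self.gateway = next(usable)  # first usable address goes to the bridge
        self._free = usable
        self.leases = {}

    def attach(self, container_name):
        """Return the container's address, allocating one on first attach."""
        if container_name not in self.leases:
            try:
                self.leases[container_name] = next(self._free)
            except StopIteration:
                raise RuntimeError("subnet exhausted") from None
        return self.leases[container_name]


ipam = BridgeIpam("172.17.0.0/16")
print("gateway:", ipam.gateway)
for name in ("web", "db", "web"):
    # Attaching "web" a second time returns its existing lease.
    print(name, "->", ipam.attach(name))
```

Real container networks add release and reuse of addresses, DNS registration, and routing between hosts, none of which this toy model covers.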

What Is a Service Mesh?

A service mesh is a dedicated infrastructure layer for handling service-to-service communication in microservices architectures, typically implemented as a set of lightweight network proxies. It provides a way to control how different parts of an application share data and resources. Key features include service discovery, load balancing, encryption, observability, traceability, and authentication and authorization.

A service mesh ensures that communication among containerized application services is fast, reliable, and secure. It abstracts the complexity of these operations from the application developers, allowing them to focus on business logic. Service meshes are increasingly important in cloud-native environments for enhancing the performance, reliability, and security of microservices.