Kubernetes and Infrastructure as Code

Kubernetes and infrastructure as code (IaC) are closely related concepts in the modern DevOps landscape. Kubernetes is a powerful container orchestration platform, while IaC refers to managing and provisioning infrastructure through machine-readable definition files. In a Kubernetes environment, IaC enables developers and operators to define, version, and manage Kubernetes resources — such as deployments, services, and configurations — using declarative languages like YAML or JSON.

Tools like Helm, Kustomize, and Terraform facilitate IaC in Kubernetes, streamlining the application lifecycle and ensuring consistency across development, testing, and production environments. By embracing IaC principles, Kubernetes users can enjoy improved collaboration, enhanced security, and reduced operational overhead, making it easier to build, deploy, and scale containerized applications.

Infrastructure as Code in the Kubernetes Environment

Manually provisioning Kubernetes clusters and their add-ons is time-consuming and error-prone. That’s where infrastructure as code (IaC) comes in. IaC has revolutionized the way engineers configure and manage infrastructure in Kubernetes deployments. By automating traditional manual processes, IaC enables the programmatic creation and management of container orchestration configurations, making infrastructure management more efficient, secure, and predictable.

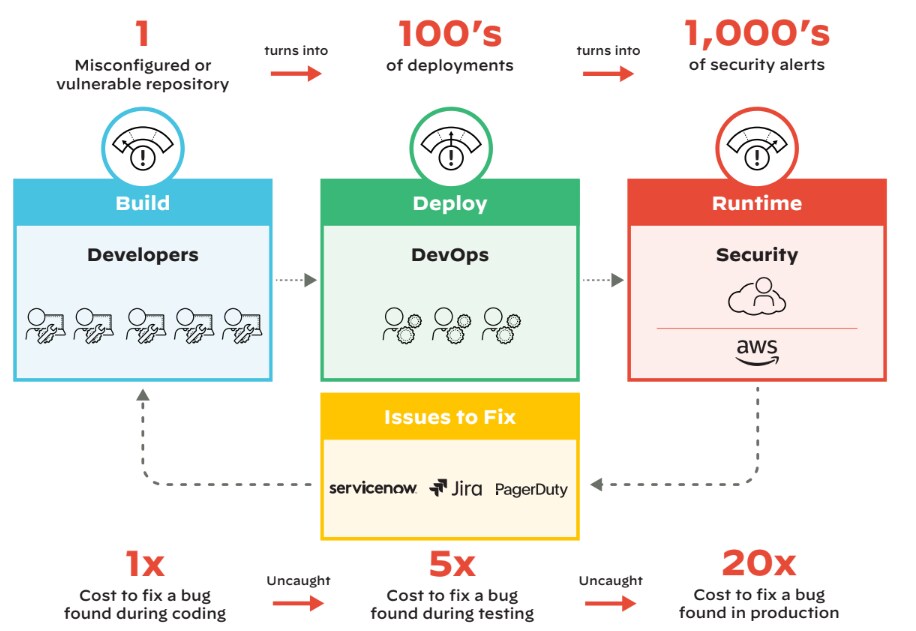

That said, while infrastructure as code streamlines Kubernetes infrastructure deployment, it also poses security risks if not implemented correctly. A misconfiguration in IaC can propagate to every new container and Kubernetes resource provisioned from it. This risk underscores the critical role IaC security plays in containerized environments.

Figure 1: Misconfiguration snowball effect, as one misconfiguration cascades into hundreds of alerts

By regularly scanning IaC for security issues, organizations can detect and prevent misconfigurations that might expose containerized applications and Kubernetes clusters to attacks. Shifting security left by scanning IaC before the build stage minimizes the potential impact of security misconfigurations.

Understanding IaC

Think of infrastructure as code as a modern approach to managing and provisioning IT infrastructure. With IaC, an infrastructure's configuration, deployment, and management are defined in human-readable, machine-executable files, typically written in a domain-specific language or descriptive markup language like YAML or JSON.

IaC enables developers and system administrators to treat infrastructure similar to how they treat application code. With IaC, they can version, test, and reuse infrastructure configurations in a systematic and repeatable manner, improving efficiency, reducing manual errors, and ensuring consistency across environments.

A key component of DevOps practices, IaC is often used with tools like Terraform, Ansible, Puppet, Chef, and Kubernetes to automate the provisioning and management of cloud resources (e.g., data servers, storage, and networks), in addition to virtual machines and container orchestration. It enables faster deployment of applications and infrastructure, seamless scaling, and improved collaboration among teams.

Kubernetes manifest files are an example of IaC. Written in JSON or YAML format, manifests specify the desired state of an object that Kubernetes will maintain when you apply the manifest. Additionally, packaged manifests such as Helm charts and Kustomize files simplify Kubernetes further by reducing complexity and duplication.
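
As a minimal sketch of what "desired state" looks like in practice, the manifest below declares a Deployment with three replicas. The names, labels, and image reference are hypothetical placeholders, not taken from any real environment.

```yaml
# Hypothetical example: names, labels, and the image reference are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
  labels:
    app: web
spec:
  replicas: 3                 # desired state: keep three pods running
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: registry.example.com/team/web:1.4.2
          ports:
            - containerPort: 8080
```

Applying a file like this (for example with kubectl apply -f) asks Kubernetes to converge on that state, and because the file lives in version control, it can be reviewed and scanned like any other code.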

Improved Kubernetes security is perhaps the biggest advantage of IaC: it enables you to scan earlier in the development lifecycle and catch easy-to-miss misconfigurations before they deploy.

IaC Security Is Key

Automated and continuous IaC scanning is a key component of DevSecOps and is crucial for securing cloud-native apps.

When implementing DevSecOps for securing cloud-native applications, it’s essential to understand basic Kubernetes infrastructure pitfalls and how IaC can help mitigate common security errors and misconfigurations:

- Leaving host infrastructure vulnerable

- Granting overly permissive access to clusters and registries

- Running containers in privileged mode and allowing privilege escalation

- Pulling “latest” container images

- Failing to isolate pods and encrypt internal traffic

- Forgetting to specify resource limits and enable audit logging

- Using the default namespace

- Incorporating insecure open-source components

Kubernetes Host Infrastructure Security

Let’s start at the most basic layer of a Kubernetes environment: the host infrastructure. These are the bare-metal and/or virtual servers that serve as Kubernetes nodes. Securing this infrastructure starts with ensuring that each node (whether it’s a worker or a master) is hardened against security risks. An easy way to do this is to provision each node using IaC templates that enforce security best practices at the configuration and operating system level.

When you write your IaC templates, ensure that the image template and the startup script for your nodes are configured to run only the strictly necessary software to serve as nodes. Extraneous libraries, packages, and services should be excluded. You may also want to provision nodes with a kernel-level security hardening framework, as well as employ basic hygiene like encrypting any attached storage.

IAM Security for Kubernetes Clusters

Depending on where and how you run Kubernetes, you may use an external cloud service to manage access controls for your Kubernetes environment, in addition to managing internal access controls within clusters using Kubernetes RBAC. For example, with Amazon EKS, you’ll use AWS IAM to grant varying access levels to Kubernetes clusters based on individual users’ needs.

Using a least-privileged approach to manage IAM roles and policies in IaC minimizes the risk of manual configuration errors that could grant overly permissive access to the wrong user or service. You can also scan your configurations with IaC scanning tools (such as the open-source tool Checkov) to automatically catch overly permissive or unused IAM roles and policies.
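
The same least-privilege idea applies to the in-cluster RBAC side mentioned above. The following is a minimal sketch, assuming a hypothetical namespace (payments) and user (jane@example.com): it grants read-only access to pods in one namespace and nothing else.

```yaml
# Hypothetical example: the namespace, role name, and user are placeholders.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: payments
rules:
  - apiGroups: [""]                    # "" is the core API group
    resources: ["pods", "pods/log"]
    verbs: ["get", "list", "watch"]    # read-only; no create, update, or delete
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: payments
subjects:
  - kind: User
    name: jane@example.com
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

Keeping roles like this in code makes wildcard verbs or resources easy to spot in review and easy for a scanner to flag.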

Container Registry and IaC Security

Although they’re not part of native Kubernetes, container registries are widely used as part of a Kubernetes-based application deployment pipeline to store and host the images deployed into a Kubernetes environment. Access control frameworks vary between container registries. With some, you can manage access via public cloud IAM frameworks. Regardless, you can typically define, apply, and manage them using IaC.

In doing so, you’ll want to ensure that container images are only accessible by registry users who need to access them. You should also prevent unauthorized users from uploading images to a registry, as insecure registries are an excellent way for threat actors to push malicious images into your environment.

Avoid Pulling “Latest” Container Images

When you tell Kubernetes to pull an image from a container registry without specifying a tag, it will automatically pull the version of the specified image labeled with the “latest” tag in the registry.

This may seem logical. After all, you typically want the latest version of an application. It can be risky from a security perspective, though, because relying on the “latest” tag makes it more difficult to track the specific version of a container you’re using. In turn, you may not know whether your containers are subject to security vulnerabilities or image-poisoning attacks that impact specific versions of an image.

To avoid this mistake, specify image versions, or better, the image digest when pulling images. You then want to audit your Kubernetes configurations to detect instances that lack specific version selection for images. It’s a little more work, but it’s worth it from a Kubernetes security perspective.
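
A minimal sketch of both pinning styles follows. The registry, image names, and version are hypothetical, and the digest is an all-zero placeholder rather than a real value.

```yaml
# Hypothetical example: image names, the tag, and the digest are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: web
spec:
  containers:
    - name: web
      # Pinned to an explicit version tag instead of an implicit "latest".
      image: registry.example.com/team/web:1.4.2
    - name: sidecar
      # Pinned to an immutable digest; replace the placeholder with the real sha256 value.
      image: registry.example.com/team/sidecar@sha256:0000000000000000000000000000000000000000000000000000000000000000
```

A tag can be moved to point at different content later, while a digest identifies one exact image, which is why the digest form is the stricter of the two.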

Avoid Privileged Containers and Escalation

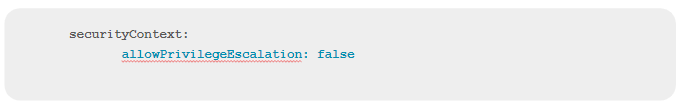

At the container level, a critical security consideration is ensuring that containers can’t run in privileged mode. Running containers in privileged mode — which gives them unfettered access to host-level resources — grants too much power. Proceed cautiously, as it’s an easy mistake to make.

Disallowing privilege escalation is easy to do using IaC. Simply write a security context that denies privilege escalation and make sure to include the context when defining a pod.

Then, ensure that privilege isn’t granted directly with the “privileged” flag or by granting CAP_SYS_ADMIN. Here again, you can use IaC scanning tools to check for the absence of this security context and to catch any other privilege escalation settings within pod settings.
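
As a sketch of such a security context, assuming a hypothetical pod and image, the container below explicitly refuses privileged mode and privilege escalation and drops all Linux capabilities (which includes CAP_SYS_ADMIN).

```yaml
# Hypothetical example: the pod name and image reference are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: web
spec:
  containers:
    - name: web
      image: registry.example.com/team/web:1.4.2
      securityContext:
        privileged: false                 # never run in privileged mode
        allowPrivilegeEscalation: false   # block gaining more privileges than the parent process
        runAsNonRoot: true                # refuse to start if the image runs as root
        capabilities:
          drop: ["ALL"]                   # removes every capability, CAP_SYS_ADMIN included
```

If a workload genuinely needs a capability, adding back only that one under capabilities.add keeps the exception visible in code.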

Isolate Pods at the Network Level

By default, any pod running in Kubernetes can talk to any other pod over the network. That’s why, unless your pods actually need to talk to each other (which is usually the case only if they’re part of a related workload), you should isolate them to achieve a higher level of segmentation between workloads.

As long as you have a Kubernetes networking layer that supports network policies (some Kubernetes distributions default to CNI plugins that lack this support), you can write a network policy to specify which other pods the selected pod can connect to for both ingress and egress. The value of defining all this in code is that you can scan the code to check for configuration mistakes or oversights that may grant more network access than intended.
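
One common pattern, sketched here under assumed names (the payments namespace and the app labels are hypothetical), is to deny all traffic in a namespace by default and then allow only the connections a workload needs.

```yaml
# Hypothetical example: the namespace, labels, and port are placeholders.
# Policy 1: deny all ingress and egress for every pod in the namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
  namespace: payments
spec:
  podSelector: {}                      # empty selector matches all pods in the namespace
  policyTypes: ["Ingress", "Egress"]
---
# Policy 2: allow only frontend pods to reach api pods on TCP 8080.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-api
  namespace: payments
spec:
  podSelector:
    matchLabels:
      app: api
  policyTypes: ["Ingress"]
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 8080
```

Because the default-deny policy also blocks egress, real workloads usually need additional egress rules (for DNS, for instance); those are omitted here for brevity.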

Encrypt Internal Traffic

Another small but critical setting is the --kubelet-https=... flag. It should be set to “true.” If it’s not, traffic between your API server and Kubelets won’t be encrypted.

Omitting the setting entirely also usually means that traffic will be encrypted by default. But because this behavior could vary depending on which Kubernetes version and distribution you use, it’s a best practice to explicitly require Kubelet encryption, at least until the Kubernetes developers encrypt traffic universally by default.

Specifying Resource Limits

You may think of resource limits in Kubernetes as a way to control infrastructure costs and prevent “noisy neighbor” issues between pods by restricting how much memory and CPU a pod can consume. In addition, resource limits help to mitigate the risk of denial-of-service (DoS) and similar cyber attacks. A compromised pod can do more damage when it can consume the entire cluster’s resources, starving other workloads of what they need to function properly. From a security perspective, then, it’s a good idea to define resource limits.
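
A minimal sketch of per-container requests and limits is shown below. The pod name, image, and the specific CPU and memory values are illustrative assumptions and should be sized for the actual workload.

```yaml
# Hypothetical example: names and the numeric values are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: web
spec:
  containers:
    - name: web
      image: registry.example.com/team/web:1.4.2
      resources:
        requests:            # what the scheduler reserves for the container
          cpu: 250m
          memory: 128Mi
        limits:              # hard ceiling the container cannot exceed
          cpu: 500m
          memory: 256Mi
```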

Avoiding the Default Namespace

By default, every Kubernetes cluster contains a namespace named “default.” As the name implies, the default namespace is where workloads will reside by default unless you create other namespaces.

Using the default namespace presents two security concerns. First, your namespace is a significant configuration value, and if it’s publicly known, it’s that much easier for attackers to exploit your environment. The other, more substantial concern with default namespaces is that if everything runs there, your workloads aren’t segmented. It’s better to create separate namespaces for separate workloads, making it harder for a breach against one workload to escalate into a cluster-wide issue.

To avoid these issues, create new namespaces using kubectl or define them in a YAML file. You can also scan existing YAML files to detect instances where workloads are configured to run in the default namespace.
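
For illustration, the sketch below defines a dedicated namespace and places a workload in it. The namespace name (payments) and the pod details are hypothetical; the equivalent imperative command for the first document would be kubectl create namespace payments.

```yaml
# Hypothetical example: the namespace, pod name, and image are placeholders.
apiVersion: v1
kind: Namespace
metadata:
  name: payments
---
apiVersion: v1
kind: Pod
metadata:
  name: web
  namespace: payments        # explicit namespace; omitting this field falls back to "default"
spec:
  containers:
    - name: web
      image: registry.example.com/team/web:1.4.2
```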

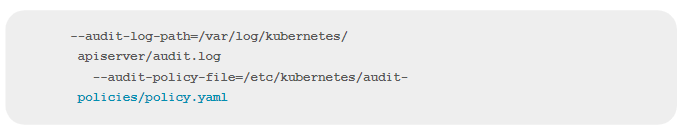

Enable Audit Logging

If a security incident (or, for that matter, a performance incident) does occur, Kubernetes audit logs help when investigating it, because they record requests to the Kubernetes API server and their outcomes. Unfortunately, audit logs aren’t enabled by default in most Kubernetes distributions. To turn this feature on, pass flags to kube-apiserver that tell Kubernetes where to store audit logs (--audit-log-path) and where to find the policy file that configures what to audit (--audit-policy-file).
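
As a sketch, assuming the API server runs as a static pod and using hypothetical file paths, the two flags and a very small audit policy might look like this. The paths are assumptions; use whatever locations your distribution mounts into the API server container.

```yaml
# Excerpt from a kube-apiserver static Pod manifest (other fields omitted).
# The file paths are placeholders and must be mounted into the container.
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        - --audit-policy-file=/etc/kubernetes/audit-policy.yaml
        - --audit-log-path=/var/log/kubernetes/audit/audit.log
---
# Minimal audit policy: log request metadata for every request.
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
  - level: Metadata
```

A single catch-all Metadata rule is only a starting point; production policies typically log some resources in more detail and exclude noisy, low-value requests.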

Because audit logs offer critical security information, it’s worth including a rule in your Kubernetes configuration scans to check whether audit logs are enabled.

Securing Open-Source Kubernetes Components

Leveraging open-source components, such as container images and Helm charts, allows developers to move fast without reinventing the wheel. Open-source components, however, are not typically secure-by-default, so you should never assume a container image or Helm chart is secure.

Our research shows that around half of all open-source Helm charts within Artifact Hub contained misconfigurations, highlighting the gap between how and where Kubernetes security is being addressed.

Before using open-source Helm charts from Artifact Hub, GitHub, or elsewhere, you should scan them for misconfigurations. Similarly, before deploying container images, you should scan them using tools to identify vulnerable components within them. Identifying security risks within Kubernetes before putting them into production is crucial, especially when it comes to integrating open-source components into your environment.

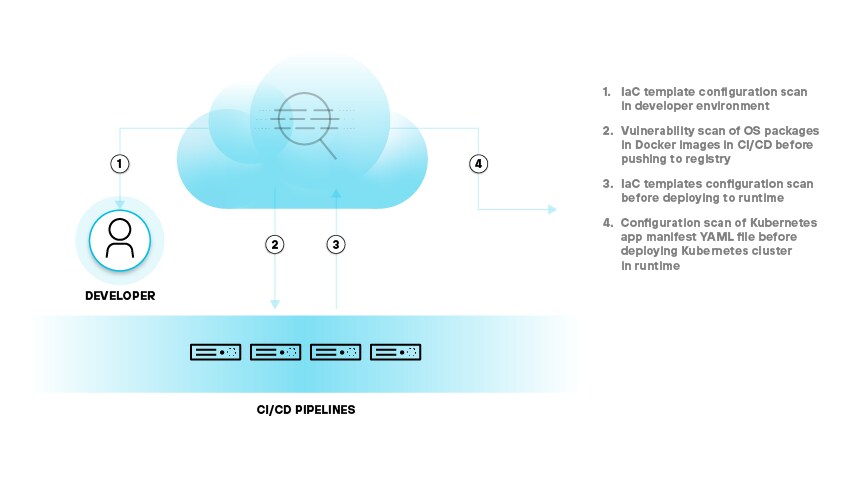

Kubernetes Security Across the DevOps Lifecycle

Identifying common Kubernetes security issues early is crucial to building a repeatable and efficient DevSecOps strategy, because feedback cycles are faster and fixes are cheaper early on. Managing Kubernetes security risks at every stage is also key to securing cloud-native applications as they progress through the lifecycle and come to resemble what runs in production.

Figure 2: Security throughout the container lifecycle, from a potentially vulnerable image to a secure runtime environment

Development

During the development stage, engineers are writing code for applications and infrastructure that will later be deployed into Kubernetes. There are three main types of security flaws to avoid in this phase:

- Misconfigurations within IaC files

- Vulnerabilities in container images

- Hard-coded Kubernetes secrets

You can check for misconfigurations using IaC scanners, which can be deployed from the command line or via IDE extensions. IaC scanners work by identifying missing or incorrect security configurations that may later lead to security risks when the files are applied.

Container scanning checks for vulnerabilities inside container images. You can run scanners on individual images directly from the command line, and most container registries also feature built-in scanners. The major limitation of container image scanning tools is that they can only detect known vulnerabilities, meaning those that have been discovered and recorded in public vulnerability databases.

When scanning container images, keep in mind that container scanners designed to validate the security of container images alone may not be capable of detecting risks that are external to container images. That’s why you should also be sure to scan Kubernetes manifests and any other files that are associated with your containers. Along similar lines, if you produce Helm charts as part of your build process, you’ll need security scanning tools that can scan Helm charts.

In addition to avoiding misconfigurations and vulnerabilities, it’s important to refrain from hard-coding secrets, such as passwords and API keys, that threat actors can leverage to gain privileged access. Secrets scanning tools are key to checking for sensitive data like passwords and access keys inside source code. They can also be used to check for secret data in configuration files that developers write to govern how their application will behave once it’s compiled and deployed.
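
To make the alternative to hard-coding concrete, here is a minimal sketch in which a container reads a password from a Kubernetes Secret at runtime. The Secret name (db-credentials), its key, and the pod details are hypothetical, and the Secret itself is assumed to be created outside of source control.

```yaml
# Hypothetical example: the Secret name, key, and pod details are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: web
spec:
  containers:
    - name: web
      image: registry.example.com/team/web:1.4.2
      env:
        - name: DB_PASSWORD
          # Avoid: value: "a-literal-password" committed to the repository.
          valueFrom:
            secretKeyRef:
              name: db-credentials
              key: password
```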

The key to getting feedback in this stage is to integrate with developers’ local tools and workflows, either via integrated development environments (IDEs) or command lines. As code gets integrated into shared repositories, it’s also important to have guardrails in place that surface feedback to the whole team so that issues can be addressed collaboratively.

Build and Deploy

After code is written for new features or updates, it gets passed on to the build and deploy stages. This is where code is compiled, packaged, and tested.

Security risks like over-privileged containers, insecure RBAC policies, or insecure networking configurations can arise when you deploy applications into production. These insecure configurations may be baked into the binaries themselves, but they could also originate from Kubernetes manifests that are created alongside the binaries to prepare the binaries for the deploy stage.

Due to the risk of introducing new security problems while making changes, it’s best to run another set of scans on your application and any related configuration or IaC files prior to the actual deployment.

Takeaway: Integrating security checks into your CI/CD pipeline is the most consistent way to get security coverage on each Kubernetes deployment. Automating those checks gives you continuous security coverage and lets you address issues before they affect users in production.
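
As one possible shape for such a pipeline check, the sketch below is a GitHub Actions workflow that runs the open-source scanner Checkov against a directory of manifests on every pull request. The workflow name, the k8s/ directory, and the Python version are assumptions for illustration.

```yaml
# Hypothetical example: the workflow name and the k8s/ directory are placeholders.
name: iac-scan
on:
  pull_request:
  push:
    branches: [main]
jobs:
  checkov:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.12"
      - name: Install Checkov
        run: pip install checkov
      - name: Scan Kubernetes manifests
        # A non-zero exit code from failed checks fails the job and blocks the merge.
        run: checkov -d k8s/ --framework kubernetes
```

The same step can be adapted to other CI systems; the important design choice is that the scan runs automatically and gates the change, rather than relying on someone remembering to run it.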

Container Runtime

On top of scanning configuration rules, you should also take steps to secure the Kubernetes runtime environment by hardening and monitoring the nodes that host your cluster. Kernel-level security frameworks like AppArmor and SELinux can reduce the risk of successful attacks that exploit a vulnerability within the operating systems running on nodes. Monitoring operating system logs and processes can also allow you to detect signs of a breach from within the OS. Security information and event management (SIEM) and security orchestration, automation, and response (SOAR) platforms are helpful for monitoring your runtime environment.

IaC can be used to deploy trusted container runtimes, such as Docker or CRI-O. It can also be used to configure container runtimes with security features such as sandboxing and resource isolation.
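
A sketch of how sandboxing and syscall filtering can be requested in code follows. It assumes a sandboxed runtime such as gVisor is already installed on the nodes and registered under the handler name runsc; the RuntimeClass name and the pod details are hypothetical.

```yaml
# Hypothetical example: assumes nodes have a sandboxed runtime registered as "runsc".
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: sandboxed
handler: runsc                    # must match a handler configured in the node's container runtime
---
apiVersion: v1
kind: Pod
metadata:
  name: web
spec:
  runtimeClassName: sandboxed     # run this pod with the sandboxed runtime
  securityContext:
    seccompProfile:
      type: RuntimeDefault        # apply the container runtime's default seccomp filter
  containers:
    - name: web
      image: registry.example.com/team/web:1.4.2
```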

Identify Benchmark KPIs

Start thinking about KPIs and set benchmarks, such as your baseline number of violations, to get the complete picture of how your program is performing so far:

- Assess your current runtime issues. Your ultimate goal is to reduce the number of runtime issues your team faces.

- Measure leading indicators of runtime issues — the posture of your code, for example. To establish your baseline code posture, break down your violations by severity level and identify the average number of new issues you see each month.

- Set goals to work toward, such as reducing new monthly violations by a specific percentage, or just track your progress over time.

If you’re starting to see the rate of new misconfigurations and vulnerabilities decrease, that’s a great indication that your team is addressing misconfigurations earlier and your policy library is fine-tuned to your needs.

Feedback and Planning

The DevOps lifecycle is a continuous loop in the sense that data collected from runtime environments should be used to inform the next round of application updates.

From a security perspective, this means that you should carefully log data about security issues that arise within production, or, for that matter, at any earlier stage of the lifecycle, and then find ways to prevent similar issues from recurring in the future.

For instance, if you determine that developers are making configuration changes between the testing and deployment stages that lead to unforeseen security risks, you may want to establish rules for your team that prevent these changes. Or, if you need a more aggressive stance, you can use access controls to restrict who’s able to modify application deployments. The fewer people who have the ability to modify configuration data, the lower the risk of changes that introduce vulnerabilities.

Kubernetes and Infrastructure as Code FAQs

What Are Integrated Development Environments (IDEs)?

Integrated development environments (IDEs) are software applications that provide a comprehensive set of tools and features to facilitate the process of software development. IDEs combine various tools used by developers, such as code editors, compilers, debuggers, and build automation utilities, into a single unified interface, streamlining the development workflow and enhancing productivity.

In the context of container orchestration, IDEs can aid in streamlining the development, deployment, and management of containerized applications. Modern IDEs have evolved to include support for containerization technologies like Docker and Kubernetes, enabling developers to work with containers and orchestration platforms more efficiently.

Key Features of IDEs in Container Orchestration Environments

Container support: IDEs often include built-in support for working with container technologies like Docker. They may provide features like Dockerfile syntax highlighting, autocompletion, and the ability to build, run, and manage Docker containers directly from the IDE.

Kubernetes integration: Some IDEs offer integration with Kubernetes, allowing developers to manage Kubernetes clusters, deploy applications, and debug containerized applications running in a Kubernetes environment. Features like Kubernetes resource template support, cluster management, and log streaming can simplify the process of working with Kubernetes.

Orchestration platform-specific extensions and plugins: Many IDEs support extensions and plugins that can further enhance container orchestration capabilities. These plugins can provide additional functionality, such as deploying applications to a specific orchestration platform, managing container registries, or working with platform-specific resources and configurations.

Version control integration: IDEs often integrate with version control systems like Git, enabling developers to manage their containerized application codebase, track changes, and collaborate with other team members more effectively.

Integrated testing and debugging tools: IDEs can include tools for testing and debugging containerized applications, enabling developers to run tests, identify issues, and debug their code within the context of containers and orchestration platforms.

CI/CD pipeline integration: Some IDEs can integrate with continuous integration and continuous deployment (CI/CD) tools, such as Jenkins, GitLab CI, or GitHub Actions, allowing developers to automate the build, test, and deployment of their containerized applications.

Customizability and extensibility: IDEs can be tailored to the specific needs of container orchestration workflows through customization and extensibility options. Developers can configure the IDE to match their preferred development environment and extend its capabilities with plugins or extensions that simplify working with container orchestration platforms.

What Is Kustomize?

Kustomize is a standalone tool for Kubernetes that allows you to customize raw, template-free YAML files for multiple purposes, without using any additional templating languages or complex scripting. It’s specifically designed to work natively with Kubernetes configuration files, making it easy to manage and maintain application configurations across different environments, such as development, staging, and production. Kustomize introduces a few key concepts:

Base is a directory containing the original, unmodified Kubernetes manifests for an application or set of related resources.

Overlay is a directory containing one or more Kustomize files that describe how to modify a base to suit a specific environment or use case.

Kustomize file (kustomization.yaml or kustomization.yml) is the central configuration file used by Kustomize to describe the desired modifications to the base resources.

Kustomize files are essentially configuration files that define how to modify the base Kubernetes YAML manifests. They typically include instructions like adding common labels or annotations, changing environment-specific configurations, and generating or patching secrets and config maps.

Common Directives Used in Kustomize Files

resources: List of files or directories to include as base resources.

patches: List of patch files that modify the base resources.

configMapGenerator: Generates a ConfigMap from a file, literal, or directory.

secretGenerator: Generates a Secret from a file, literal, or command.

commonLabels: Adds common labels to all resources and selectors.

commonAnnotations: Adds common annotations to all resources.
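
Putting the directives above together, a production overlay might look like the following sketch. The directory layout, file names (replica-patch.yaml, credentials.env), and label values are hypothetical.

```yaml
# overlays/production/kustomization.yaml
# Hypothetical example: paths, file names, and values are placeholders.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

resources:
  - ../../base                  # the unmodified base manifests

patches:
  - path: replica-patch.yaml    # environment-specific change, e.g. a higher replica count

configMapGenerator:
  - name: app-config
    literals:
      - LOG_LEVEL=info

secretGenerator:
  - name: app-credentials
    envs:
      - credentials.env         # keep this file out of version control

commonLabels:
  environment: production

commonAnnotations:
  owner: platform-team
```

Rendering the overlay (for example with kubectl kustomize overlays/production) produces plain manifests, which means the output can be passed through the same IaC scanners as any other Kubernetes YAML.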